Rob Fitzpatrick


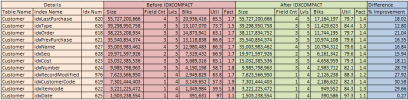

Compaction significantly reduced the size of the "idxCustomerCode" index on disk. A full scan on that index (e.g. for each customer use-index idxCustomerCode) would read only 69.4% as many index blocks after compaction as before (2186622 / 3149652).
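To make the arithmetic explicit, here is a small sketch that recomputes the ratio from the two block counts quoted above. The variable names are mine, chosen for illustration only.

```python
# Index block counts for idxCustomerCode, as quoted in the post.
blocks_before = 3_149_652  # before compaction
blocks_after = 2_186_622   # after compaction

# Fraction of index blocks a full index scan reads after compaction.
ratio = blocks_after / blocks_before
# Fraction of index blocks no longer read.
reduction = 1 - ratio

print(f"blocks read after compaction: {ratio:.1%} of before")
print(f"reduction in index blocks:    {reduction:.1%}")
print(f"blocks saved:                 {blocks_before - blocks_after}")
```

So the scan reads roughly 30.6% fewer index blocks, which is the flip side of the 69.4% figure.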

In production, though, you shouldn't specify a compaction factor of 100%, as that will lead to block splits as records are inserted and updated, and block splits are expensive. If you specify a compaction factor of 80, some free space will be left in the blocks for future growth, and you'll still get the benefits of compaction: improved cache hit rates and reduced physical I/O.
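As a rough way to think about the trade-off, the sketch below estimates how many blocks an index would occupy at a given target utilization. It is a simplified model, not how idxcompact actually works: it assumes entries spread evenly across blocks, and the function name and the 1000-block figure are hypothetical.

```python
def estimated_blocks(full_blocks: int, factor_pct: int) -> int:
    """Estimate block count at a target utilization (simplified model).

    full_blocks: hypothetical block count if every block were 100% full.
    factor_pct:  target utilization percentage (1-100).
    """
    if not 1 <= factor_pct <= 100:
        raise ValueError("factor_pct must be between 1 and 100")
    # Ceiling division using integers only, to avoid float rounding.
    return -(-full_blocks * 100 // factor_pct)


# Hypothetical index that would fit in 1000 completely full blocks.
for pct in (100, 80):
    print(pct, estimated_blocks(1000, pct))
```

Under this model a factor of 80 costs about 25% more blocks than a fully packed index, and in exchange each block keeps some room to absorb new entries before it has to split.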

I would also test the performance of idxbuild to compare with idxcompact. You mentioned 11.6 documentation. Are you doing this work with 11.6 binaries?
