Mike Wooldridge

Guest

This is the final blog in our five-part series about semantic RAG, in which we show you how to integrate a chatbot into the flow and connect it to an LLM service. Be sure to check out the previous blogs on: Enhancing GenAI, The Knowledge Graph, Content Preparation and Query Orchestration.

The last step in our semantic RAG workflow is constructing the frontend that will handle the interaction with the end user. In this blog, we explore how to create a search application with an integrated AI-powered chatbot that will send the user query to the Progress MarkLogic database and return the textual answer with linked citations that we prepared in the previous step.

Visualizing and managing conversations between a user and a large language model (LLM) is a critical part of a semantic RAG application. We’re going to use Progress MarkLogic FastTrack to build the UI. FastTrack is a React UI component library like Bootstrap and Material UI. What’s special about the FastTrack components is that they work seamlessly with data stored in Progress MarkLogic Server. FastTrack exposes the Server APIs out of the box so developers can build applications much faster.

For this example, we’re going to use the FastTrack ChatBot component to build our conversational UI to manage ongoing conversations with an LLM.

The component displays an interactive console for users to directly engage with an LLM. It accepts natural-language queries, passes those queries to the backend and displays each query, response and related source information in a scrollable container.

Out of the box, the ChatBot widget handles the chat interactions using a prescribed endpoint API. If you design your middle-tier server to adhere to this API, you can implement a chat interface with zero configuration.

You can also configure the ChatBot to communicate with any HTTP endpoint you want, send any query payload to that endpoint and display responses that are transformed and formatted to your liking as an ongoing message thread. This makes the FastTrack ChatBot an easy and flexible way to manage AI-driven conversations.

The FastTrack UI widgets don’t communicate directly with your application’s middle-tier server. They communicate through methods made available by a special widget called MarkLogicContext. MarkLogicContext is responsible for turning user requests into the payloads the middle-tier server will accept, and sending the payloads via HTTP using the connection settings defined for the application (FastTrack uses the Axios library for executing HTTP calls). MarkLogicContext is also in charge of maintaining application state, such as the most recent response values and search constraints.

The following diagram shows the widgets and application code sending messages via MarkLogicContext to the middle-tier server, which communicates with MarkLogic Server and, in an AI application, an LLM.

The FastTrack ChatBot widget can use the

Below is an example request sent by the postChat method.

In a RAG application, the middle-tier server can use the query text and combined query to query MarkLogic Server. The document information it receives back can be passed on to the LLM to augment the original query text.

The ChatBot widget expects to get a response back from the backend as a specific schema. Here is an example of such a response:

The payload includes an output string that is the RAG-enhanced answer from the LLM. The response payload can also include an optional array of citations as label/ID pairs, which represent the document information that supported the answer. The widget offers a

If you’ve designed a middle-tier server that can accept the native ChatBot request and response, the FastTrack ChatBot needs zero configuration. You just need to include the widget in your UI. The following shows the React code that integrates the ChatBot widget:

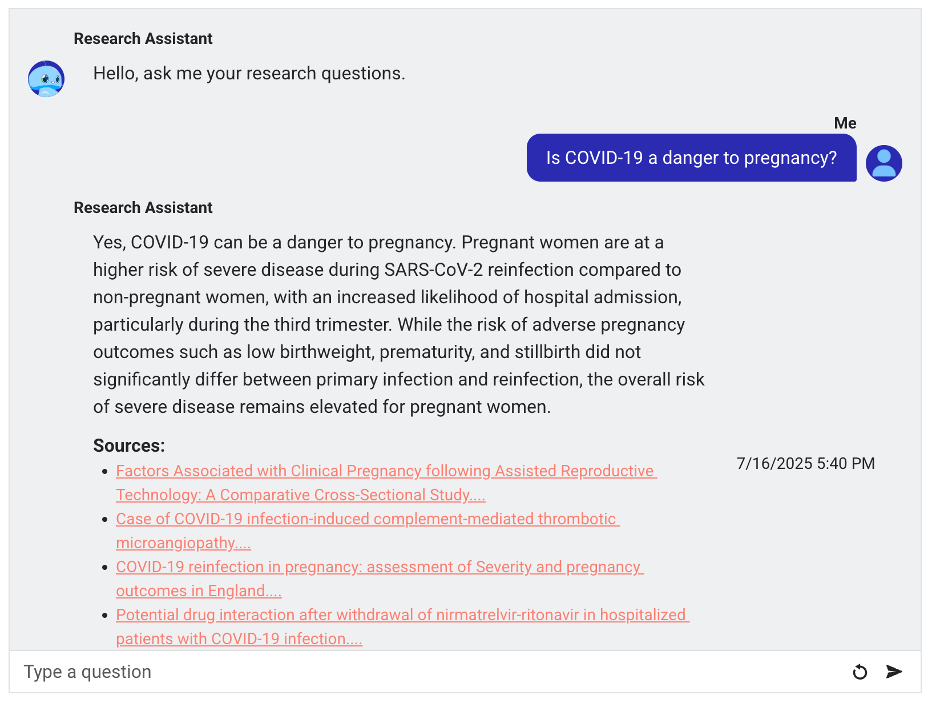

The following is rendered in the UI.

The ChatBot displays the user questions, corresponding backend responses and source information for each response.

Configuration via React props offers a low-code way of customizing the ChatBot UI. You can add props to include initial messaging, display user icons and formatted timestamps and display a reset button for clearing the messages.

The following props are available for customizing the look, feel and default content displayed in the widget.

See the ChatBot documentation for a complete list of widget props.

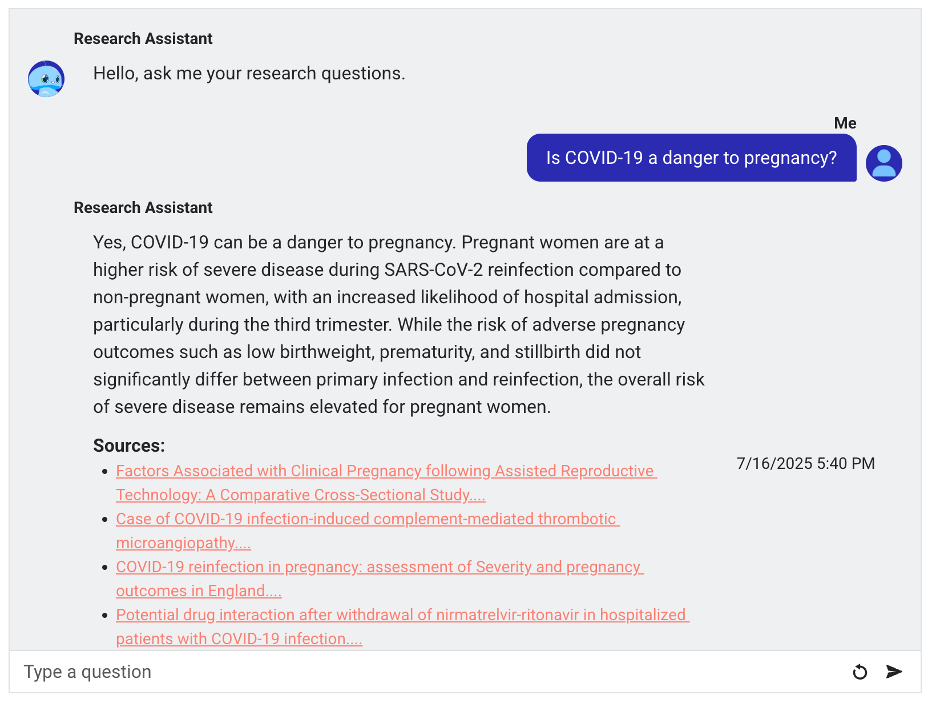

The following shows the ChatBot with UI configurations in place:

It renders the ChatBot with custom icons, a custom introductory message, formatted timestamps, truncated sources and a reset button.

In the case where your middle-tier API differs from what the FastTrack ChatBot expects, you have the option of handling the sending of messages to the backend and displaying the response content yourself. The

To include the response message in the conversation, you can set the

The

The following are the props for the custom handling of chat messages.

See the ChatBot documentation for a complete list of widget props.

Below is an example React code that performs custom handling of the chat messages. It uses

With the FastTrack ChatBot widget, developers can easily integrate AI-powered conversations into their RAG applications. The React props available let you customize the widget for common AI use cases and let you interact with the LLM of your choice. Visit the MarkLogic FastTrack page for more information.

Continue reading...

The last step in our semantic RAG workflow is constructing the frontend that will handle the interaction with the end user. In this blog, we explore how to create a search application with an integrated AI-powered chatbot that will send the user query to the Progress MarkLogic database and return the textual answer with linked citations that we prepared in the previous step.

Visualizing and managing conversations between a user and a large language model (LLM) is a critical part of a semantic RAG application. We’re going to use Progress MarkLogic FastTrack to build the UI. FastTrack is a React UI component library like Bootstrap and Material UI. What’s special about the FastTrack components is that they work seamlessly with data stored in Progress MarkLogic Server. FastTrack exposes the Server APIs out of the box so developers can build applications much faster.

For this example, we’re going to use the FastTrack ChatBot component to build our conversational UI to manage ongoing conversations with an LLM.

The component displays an interactive console for users to directly engage with an LLM. It accepts natural-language queries, passes those queries to the backend and displays each query, response and related source information in a scrollable container.

Out of the box, the ChatBot widget handles the chat interactions using a prescribed endpoint API. If you design your middle-tier server to adhere to this API, you can implement a chat interface with zero configuration.

You can also configure the ChatBot to communicate with any HTTP endpoint you want, send any query payload to that endpoint and display responses that are transformed and formatted to your liking as an ongoing message thread. This makes the FastTrack ChatBot an easy and flexible way to manage AI-driven conversations.

Sending Requests with MarkLogicContext

The FastTrack UI widgets don’t communicate directly with your application’s middle-tier server. They communicate through methods made available by a special widget called MarkLogicContext. MarkLogicContext is responsible for turning user requests into the payloads the middle-tier server will accept, and sending the payloads via HTTP using the connection settings defined for the application (FastTrack uses the Axios library for executing HTTP calls). MarkLogicContext is also in charge of maintaining application state, such as the most recent response values and search constraints.

The following diagram shows the widgets and application code sending messages via MarkLogicContext to the middle-tier server, which communicates with MarkLogic Server and, in an AI application, an LLM.

The FastTrack ChatBot widget can use the postChat method exposed by MarkLogicContext to communicate with the chat plumbing in the backend. The postChat method receives the string query from the ChatBot input field as well as any optional arguments for customizing the request.

| Argument | Type | Description |
|---|---|---|
| message | string | Message to submit to the backend. For example, a text prompt from the ChatBot widget. |
| combinedQuery | object | Optional combined query object to constrain the RAG query. |
| parameters | object | Object of key/value pairs that determine how to connect to the backend. Available keys are scheme, host, port, path, auth. If parameter values are not defined, the values from MarkLogicProvider are used. The default path is /api/fasttrack/chat. |
| config | AxiosRequestConfig | An optional configuration object defining the HTTP request. |
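To make the fallback rule for the parameters argument concrete, here is a minimal sketch of how a request URL could be resolved. The helper name resolveChatUrl, the providerSettings object and the "http" and "localhost" last-resort values are assumptions for illustration; they are not part of the FastTrack API, and the library's internal logic may differ.

```javascript
// Hypothetical helper, not part of FastTrack. It only illustrates the
// documented rule: per-call parameters win, provider settings fill gaps,
// and the path defaults to /api/fasttrack/chat.
const DEFAULT_CHAT_PATH = "/api/fasttrack/chat";

function resolveChatUrl(parameters = {}, providerSettings = {}) {
  // Prefer the per-call value, then the provider value, then a fallback.
  const pick = (key, fallback) =>
    parameters[key] ?? providerSettings[key] ?? fallback;

  const scheme = pick("scheme", "http"); // assumed last-resort value
  const host = pick("host", "localhost"); // assumed last-resort value
  const port = pick("port", undefined);
  const path = pick("path", DEFAULT_CHAT_PATH);

  // Leave the port out when none is configured so the URL stays valid.
  const authority = port === undefined ? host : `${host}:${port}`;
  return `${scheme}://${authority}${path}`;
}

const providerSettings = { scheme: "http", host: "example.org", port: 4000 };
console.log(resolveChatUrl({}, providerSettings));
console.log(resolveChatUrl({ path: "/api/custom/chat" }, providerSettings));
```

With no per-call parameters, the sketch resolves to the provider host and the default chat path; passing a path overrides only that one value.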

Below is an example request sent by the postChat method.

Code:

    POST http://example.org:4000/api/fasttrack/chat
    {
      "query": "Is COVID a danger for pregnant women?",
      "combinedQuery": {
        "query": // structured query
        "qtext": // query text
        "options": // query options
      }
    }

In a RAG application, the middle-tier server can use the query text and combined query to query MarkLogic Server. The document information it receives back can be passed on to the LLM to augment the original query text.
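The retrieve-then-augment flow on the middle tier could look roughly like the sketch below. It is not the implementation used in this series: searchDocuments and askLlm are hypothetical functions injected by the caller to stand in for the MarkLogic Server and LLM clients, and the title, text and uri document fields are assumed. The return value is an output string plus label/ID citation pairs.

```javascript
// Sketch only. searchDocuments and askLlm are hypothetical, caller-supplied
// async functions; the document fields (title, text, uri) are assumptions.
async function handleChat(body, { searchDocuments, askLlm }) {
  const { query, combinedQuery } = body;

  // 1. Retrieve supporting documents for the user's question.
  const documents = await searchDocuments(query, combinedQuery);

  // 2. Augment the original question with the retrieved text.
  const context = documents
    .map((doc, i) => `[${i + 1}] ${doc.title}\n${doc.text}`)
    .join("\n\n");
  const prompt =
    "Answer the question using only the sources below.\n\n" +
    `${context}\n\nQuestion: ${query}`;

  // 3. Ask the LLM, then return the answer together with its citations.
  const output = await askLlm(prompt);
  return {
    output,
    citations: documents.map((doc) => ({
      citationLabel: doc.title,
      citationId: doc.uri,
    })),
  };
}

// Usage with stubbed dependencies in place of real services.
const stubs = {
  searchDocuments: async () => [
    { title: "Example study", text: "Example passage.", uri: "/content/example.xml" },
  ],
  askLlm: async (prompt) => `Stub answer based on ${prompt.length} prompt characters.`,
};

handleChat({ query: "Is COVID a danger for pregnant women?" }, stubs)
  .then((result) => console.log(JSON.stringify(result, null, 2)));
```

Injecting the two dependencies keeps the handler independent of any particular search or LLM client, which also makes it easy to exercise with stubs.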

Receiving a Response from the LLM

The ChatBot widget expects the backend to return a response in a specific schema. Here is an example of such a response:

Code:

    {
      "output": "Yes, COVID-19 poses a danger to pregnant women. The study on COVID-19 reinfection in pregnancy indicates that pregnant women have a higher risk of severe disease during reinfection compared to non-pregnant women.",
      "citations": [
        {
          "citationLabel": "A Nationwide Study of COVID-19 Infection.",
          "citationId": "/content/39733771.xml"
        },
        {
          "citationLabel": "COVID-19 reinfection in pregnancy",
          "citationId": "/content/39733828.xml"
        }
      ]
    }

The payload includes an output string that is the RAG-enhanced answer from the LLM. The response payload can also include an optional array of citations as label/ID pairs, which represent the document information that supported the answer. The widget offers a responseTransform prop that lets you change this structure or the content prior to display.

ChatBot with Minimal Configuration

If you’ve designed a middle-tier server that can accept the native ChatBot request and response, the FastTrack ChatBot needs zero configuration. You just need to include the widget in your UI. The following shows the React code that integrates the ChatBot widget:

Code:

    import { ChatBot } from "ml-fasttrack";
    import './ChatBotAssistant.css';

    export const ChatBotAssistant = () => {
      return (
        <ChatBot />
      )
    }

The following is rendered in the UI.

The ChatBot displays the user questions, corresponding backend responses and source information for each response.

Customizing the ChatBot UI

Configuration via React props offers a low-code way of customizing the ChatBot UI. You can add props to set initial messages, show user icons and formatted timestamps, and display a reset button for clearing the conversation.

The following props are available for customizing the look, feel and default content displayed in the widget.

| Prop | Type | Description |
|---|---|---|
| user | User | Object representing the user participant in the chat. See the KendoReact UserProps. |
| bot | User | Object representing the bot participant in the chat. See the KendoReact UserProps. |
| messages | Message[] | Represents an array of chat messages, including any initial messages. See the KendoReact MessageProps. |
| timestampFormat | string | Format for the timestamp of the chat messages. The default is M/d/y h:mm:ss a. |
| placeholder | string | Placeholder text for the message input field when it is empty. |
| width | string \| number | Sets the width of the ChatBot. |
| sourceTruncation | number | Number of characters to display for each source item label. If the source label text exceeds the truncation value, it is truncated and an ellipsis is added. |
| noResponseText | string | Text to display when the bot has no response to the user message. This is displayed as a chat message. |
| className | string | Class name applied to the widget for CSS formatting. |
| showRestart | boolean | Whether the restart button to clear the conversation is rendered. The default is false. |
| showSources | boolean | Whether source citations for bot responses are displayed in the chat. The default is true. |

See the ChatBot documentation for a complete list of widget props.
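As a rough illustration of what sourceTruncation does to a long citation label, the sketch below shows one plausible reading of the description in the table. The helper truncateLabel is hypothetical, and the widget's exact behavior (for example, whether the ellipsis counts toward the limit or which ellipsis character is used) is an assumption.

```javascript
// Hypothetical helper showing one plausible reading of sourceTruncation:
// keep the first maxChars characters and append an ellipsis when text is cut.
function truncateLabel(label, maxChars) {
  if (label.length <= maxChars) {
    return label; // short labels are shown unchanged
  }
  return label.slice(0, maxChars) + "...";
}

console.log(truncateLabel("COVID-19 reinfection in pregnancy", 8));
console.log(truncateLabel("Short label", 300));
```

With a limit of 8, the first label is cut after "COVID-19" and gains an ellipsis, while the second label fits within 300 characters and is returned unchanged.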

The following shows the ChatBot with UI configurations in place:

Code:

    import { useState } from "react";
    import { ChatBot } from "ml-fasttrack";
    import './ChatBotAssistant.css';

    export const ChatBotAssistant = (props) => {

      const bot = {
        id: 0,
        name: "Research Assistant",
        avatarUrl: "/chatbot.png"
      }

      const user = {
        id: 1,
        name: "Me",
        avatarUrl: "/user.png"
      }

      const initialMessages = [
        {
          author: bot,
          timestamp: new Date(),
          text: "Hello, ask me your research questions.",
        }
      ];

      const [messages, setMessages] = useState(initialMessages);

      return (
        <ChatBot
          bot={bot}
          user={user}
          messages={messages}
          placeholder="Type a question"
          sourceTruncation={300}
          showRestart={true}
        />
      )
    }

It renders the ChatBot with custom icons, a custom introductory message, formatted timestamps, truncated sources and a reset button.

Custom Request and Response Handling

If your middle-tier API differs from what the FastTrack ChatBot expects, you can handle sending messages to the backend and displaying the response content yourself. The onMessageSend prop takes a callback that receives the user query in an event object. You can send that user query to any endpoint you like with the callback.

To include the response message in the conversation, you can set the customBotResponse prop to true and add the RAG response to the messages array yourself. Any transformation of the content and formatting is up to you.

The onSourceClick prop lets you handle citation clicks. You can display source content in a window or some other display method.

The following are the props for the custom handling of chat messages.

| Prop | Type | Description |
|---|---|---|
| responseTransform | function | Callback function for transforming the chat response. Accepts the chat response and returns a transformed response to be used by the widget. |
| onMessageSend | ((event: any) => void) | Callback function triggered when the user types a message and clicks the Send button or presses Enter. |
| onSourceClick | ((event: any, sourceId: any) => void) | Callback function triggered when a source is clicked. The function can receive the event and respective source ID as arguments. |
| customBotResponse | boolean | Boolean value which controls whether to use the widget's default bot response or not. When set to true, create a custom method to update the 'messages' object with your ideal bot response. The default is false. |

See the ChatBot documentation for a complete list of widget props.
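As an illustration of the responseTransform prop, the sketch below maps a differently shaped backend response onto the output/citations structure the widget displays. The incoming shape (an answer string and a sources array with title and uri fields) and the function name toChatBotResponse are assumptions made for this example.

```javascript
// Assumed (hypothetical) backend response shape:
//   { answer: string, sources: [{ title: string, uri: string }] }
// The function returns the output/citations structure described earlier.
function toChatBotResponse(response) {
  // Treat a missing or malformed sources field as "no citations".
  const sources = Array.isArray(response.sources) ? response.sources : [];
  return {
    output: response.answer ?? "",
    citations: sources.map((source) => ({
      citationLabel: source.title,
      citationId: source.uri,
    })),
  };
}

console.log(
  toChatBotResponse({
    answer: "Example answer.",
    sources: [{ title: "Example study", uri: "/content/example.xml" }],
  })
);
```

A function like this could then be passed to the widget as responseTransform={toChatBotResponse}, so the rest of the ChatBot configuration stays unchanged.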

Below is example React code that performs custom handling of the chat messages. It uses onMessageSend to execute the postChat method for sending queries to the default RAG backend (but it could just as well call any custom endpoint). It also transforms and formats the LLM response and includes a callback for handling source clicks.

Code:

    import { useState, useContext } from "react";
    import { ChatBot, MarkLogicContext } from "ml-fasttrack";
    import axios from 'axios';
    import './ChatBotAssistant.css';

    export const ChatBotAssistant = (props) => {

      // See previous... (bot, user, messages and setMessages as in the earlier example)

      const context = useContext(MarkLogicContext);

      // Ask the backend to clear its conversation state when the chat is restarted
      const handleRestart = async () => {
        try {
          const res = await axios.get(import.meta.env.VITE_CLEAR_URL);
          if (res.status === 200) {
            console.log('ChatBot restarted');
          }
        } catch (err) {
          console.error(err);
        }
      };

      // Strip bracketed markers from the answer and render citations as a clickable list
      const formatResponse = (response) => {
        console.log('formatResponse', response);
        return (
          <div>
            <p>{response.output.replace(/ *\[[^\]]*\] */g, "")
              .concat(" (Powered by FastTrack)")}</p>
            <ul>
              {(response.citations ?? []).map((citation, index) => (
                <li
                  key={index}
                  onClick={(event) => props.onSourceClick(event, citation.citationId)}
                  className="source-link"
                >
                  {index + 1}. {citation.citationLabel}
                </li>
              ))}
            </ul>
          </div>
        )
      };

      const addNewMessage = async (event) => {
        const latestUserMessage = event.message;
        // Add user message and then show loading indicator
        setMessages((oldMessages) => [...oldMessages, latestUserMessage]);
        setMessages((oldMessages) => [...oldMessages,
          {
            author: bot,
            typing: true,
          }
        ]);
        try {
          const response = await context.postChat(latestUserMessage.text);
          // Replace the loading indicator with the formatted response
          setMessages((oldMessages) => [
            ...oldMessages.filter((message) => !message.typing),
            {
              author: bot,
              timestamp: new Date(),
              text: formatResponse(response),
            }
          ]);
        } catch (err) {
          // Remove the loading indicator if the request fails
          console.error(err);
          setMessages((oldMessages) => oldMessages.filter((message) => !message.typing));
        }
      };

      return (
        <ChatBot
          bot={bot}
          user={user}
          messages={messages}
          placeholder="Type a question"
          sourceTruncation={300}
          showRestart={true}
          onMessageSend={addNewMessage}
          onSourceClick={(event, sourceId) => props.onSourceClick(event, sourceId)}
          onRestart={handleRestart}
          customBotResponse={true}
        />
      )
    }

Conclusion

With the FastTrack ChatBot widget, developers can easily integrate AI-powered conversations into their RAG applications. The React props available let you customize the widget for common AI use cases and let you interact with the LLM of your choice. Visit the MarkLogic FastTrack page for more information.
