George Potemkin

Member

Dear Santa(s),

I have been rather bad this year. So YouTube had recommended me to watch Gus Bjorklund presentation - "Time And How To Get Rid Of it -- Revisited".

The presentation raised some questions in my poor head but I can't find the answers on my own. For Christmas, can I get the answers to my questions to have a happy empty mind as it was before?

In addition, I have the detailed statistics for a couple of ATM tests. The tests used to be a way to get the answers. Unfortunately, the test scenario wasn’t mine and their results only raised more questions.

Gus' presentation:

“we're interesting in how long the transactions take”

ATM transaction duration

slow 82 ms (-spin 5,000)

fast 31 ms (-spin 50,000)

IMHO, we also need to know a transaction duration in a single-user test where there are no resource competition. It can be used as a baseline. I bet the result will surprise. The number of sessions run in a test significantly affects its result. Maybe more significantly than the spin value. If you would like to get the shortest transaction duration /and/ the maximal number of the commits per second then the best thing to do is to find out the optimal number of the sessions to run.

Is the optimal number of the sessions determined by the number of CPU threads? I bet NO. My guess - the optimal number is much smaller. Non testatum. For a record - In Gus’ tests the number of the concurrent users was 150 and the number of CPU threads was 16 (4 quad-core 2.4 GHz intel processors).

At the end of the presentation Gus said:

“The other thing that happens as you're using these CPU cycles is the operating system eventually is going to say okay you've had the CPU long enough, it's time to give somebody else a chance. So you get stopped and somebody else gets scheduled. Well, if that other person who's scheduled wants the same lock that you have and you stopped so you can't release it then you could end up in a situation where 100% of the CPU goes to all the people who are trying to get the lock and the lock holder gets starved other.”

We can estimate how likely such a situation (an inactive process owns a latch) is. My estimation – the chances are low.

But there is another aspect of the same thing. A quantum or CPU time slice (the period of time a scheduler allows a particular process to be served by CPU) is about 10-100 ms. The transaction durations in ATM tests is of the same order as a quantum. If a transaction is not committed during a quantum then the out of service period will be added to the transaction duration. We will get the significant jumps in transaction durations.

Gus wrote:

“What do we learn from all this?

3) -spin should be higher than we thought”

The optimal spin value in Gus’ tests is 50,000.

How about the best practice: “-spin should be set as a starting point to pi * birth year of DBA”?

Why the optimal value of the spin differs between ATM and readprobe tests? As far as I understand, the optimal spin value is when a process is spinning about the same time as the latch is locked by other process. So if we are forced to use the higher value of the spin, the one of the latches is probably locked longer than we thought. The question is: which latch? Are there any direct evidence of the long latch locks? Are there any theoretical explanation of this? Gus seems to say the opposite:

“Latches are typically held for very short times.

maybe as little as 100 nanoseconds on modern computers

btw: with 2.4 GHz processor, 200 nsec is 479 clocks”

Pathologists know: MTX latch can be owned by a process while it is writing to a disk. Disk IO is a slow operation. But during ATM tests the most (if not all) of MTX locks happen outside disk operations. Even MTX locks should stay short.

The total time while a process locks any latches is a very tiny part of the transaction duration. Other phases of transaction processing are much longer. The time of these “latch free” phases may vary randomly due to the different factors and these variations, IMHO, can be longer than a phase when a process is using the latches.

The optimal spin value on more /powerful/ server than the one used by Gus is 500,000 rather than 50,000! “More powerful” = more CPUs.

-spin: “On multi-processor machines, the default value is 6000 * # of CPUs”

The common opinion seemed to be “the default value is wrong”.

Is this opinion wrong?

Gus’ server: 4 quad-core 2.4 GHz intel processors

I have some results for the server: Intel Xeon Processor E7 v4 Family 2.80 GHz (max turbo frequency: 3.50 GHz), CPU threads (according cpuinfo): 80

Unfortunately, I have the detailed statistics only for the preliminary tests on this server that were run with:

The concurrent users: 150 and 4

-spin 10000

-nap 10

-napmax 250

The team of 4 users is 4 times more efficient than 150 sessions! One user from the magnificent four can beat the whole band of 150 sessions! But 8 sessions create almost te same number of transactions as 4 sessions. The power of the small teams quickly weakens. To remind: CPU threads is 80, -spin 10,000.

Of course, 4 users create the shortest transactions.

What was the bottleneck for 150 sessions? Of course, the naps and waits. But I would like to know the name of the concrete resource.

The tests prove – the spin value does matter (as well as the number of users):

Does the spin value optimize the access namely to the MTX latch? Of course, MTX latch got the most naps. But it would be better to check how busy the latches are. I did it for the 150 user test with the spin 10,000: MTX latch was busy only 14% of the time. Every transaction in ATM tests locks the latch exactly 8 times and forces to nap on the latch 0.30 times in average – 0.04 naps per latch lock. Is it enough to blame the MTX latch for the bad results in the test? I’m not sure. Whom if it’s not MTX?

How busy the latches when the spin value is optimal? Unfortunately, I don’t have data from the tests with the large spin.

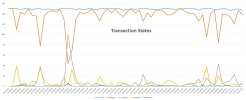

Moreover, the transactions spend in the “begin” status 85% (150 users) and 77% (5 users) of its duration, in other words, the most of the time they are not trying to update a database! Correspondently, the “active” phase lasts only 10 ms and 0.1 ms in the absolute numbers. Bad application code can keep the transactions in the “begin” status as long as you want but it’s not the case for the code used in ATM test. Why the begin phase of the simple transactions is their longest part?

“Phase 2” phase that is a rudiment of the forgotten Two-Phase Commit feature is 3 times shorted than the Active phase (150 users test). Nevertheless, it’s almost 10 times longer than a whole transaction in the 5 users test. What database resources are used during “phase 2”? Why the “phase 2” is not instant when 2PC is not enabled?

“None” status is very short – about 0.3% of transaction duration but they are still visible. Progress seems to do “nothing” during the “none” phase but it still takes some time.

Some data from the tests with 150 and 5 users as well as the additional questions are at the post below.

I have been rather bad this year. So YouTube had recommended me to watch Gus Bjorklund presentation - "Time And How To Get Rid Of it -- Revisited".

The presentation raised some questions in my poor head but I can't find the answers on my own. For Christmas, can I get the answers to my questions to have a happy empty mind as it was before?

In addition, I have the detailed statistics for a couple of ATM tests. The tests used to be a way to get the answers. Unfortunately, the test scenario wasn’t mine and their results only raised more questions.

Gus' presentation:

“we're interesting in how long the transactions take”

ATM transaction duration

slow 82 ms (-spin 5,000)

fast 31 ms (-spin 50,000)

IMHO, we also need to know a transaction duration in a single-user test where there are no resource competition. It can be used as a baseline. I bet the result will surprise. The number of sessions run in a test significantly affects its result. Maybe more significantly than the spin value. If you would like to get the shortest transaction duration /and/ the maximal number of the commits per second then the best thing to do is to find out the optimal number of the sessions to run.

Is the optimal number of the sessions determined by the number of CPU threads? I bet NO. My guess - the optimal number is much smaller. Non testatum. For a record - In Gus’ tests the number of the concurrent users was 150 and the number of CPU threads was 16 (4 quad-core 2.4 GHz intel processors).

At the end of the presentation Gus said:

“The other thing that happens as you're using these CPU cycles is the operating system eventually is going to say okay you've had the CPU long enough, it's time to give somebody else a chance. So you get stopped and somebody else gets scheduled. Well, if that other person who's scheduled wants the same lock that you have and you stopped so you can't release it then you could end up in a situation where 100% of the CPU goes to all the people who are trying to get the lock and the lock holder gets starved other.”

We can estimate how likely such a situation (an inactive process owns a latch) is. My estimation – the chances are low.

But there is another aspect of the same thing. A quantum or CPU time slice (the period of time a scheduler allows a particular process to be served by CPU) is about 10-100 ms. The transaction durations in ATM tests is of the same order as a quantum. If a transaction is not committed during a quantum then the out of service period will be added to the transaction duration. We will get the significant jumps in transaction durations.

Gus wrote:

“What do we learn from all this?

3) -spin should be higher than we thought”

The optimal spin value in Gus’ tests is 50,000.

How about the best practice: “-spin should be set as a starting point to pi * birth year of DBA”?

Why the optimal value of the spin differs between ATM and readprobe tests? As far as I understand, the optimal spin value is when a process is spinning about the same time as the latch is locked by other process. So if we are forced to use the higher value of the spin, the one of the latches is probably locked longer than we thought. The question is: which latch? Are there any direct evidence of the long latch locks? Are there any theoretical explanation of this? Gus seems to say the opposite:

“Latches are typically held for very short times.

maybe as little as 100 nanoseconds on modern computers

btw: with 2.4 GHz processor, 200 nsec is 479 clocks”

Pathologists know: MTX latch can be owned by a process while it is writing to a disk. Disk IO is a slow operation. But during ATM tests the most (if not all) of MTX locks happen outside disk operations. Even MTX locks should stay short.

The total time while a process locks any latches is a very tiny part of the transaction duration. Other phases of transaction processing are much longer. The time of these “latch free” phases may vary randomly due to the different factors and these variations, IMHO, can be longer than a phase when a process is using the latches.

The optimal spin value on more /powerful/ server than the one used by Gus is 500,000 rather than 50,000! “More powerful” = more CPUs.

-spin: “On multi-processor machines, the default value is 6000 * # of CPUs”

The common opinion seemed to be “the default value is wrong”.

Is this opinion wrong?

Gus’ server: 4 quad-core 2.4 GHz intel processors

I have some results for the server: Intel Xeon Processor E7 v4 Family 2.80 GHz (max turbo frequency: 3.50 GHz), CPU threads (according cpuinfo): 80

Unfortunately, I have the detailed statistics only for the preliminary tests on this server that were run with:

The concurrent users: 150 and 4

-spin 10000

-nap 10

-napmax 250

Code:

Activity: Resource Queues

150 sessions 4 sessions

Resource Queue Requests Waits Requests Waits (all per sec)

TXE Commit Lock 2164 209 8181 6

Code:

150 sessions 4 sessions

Tx duration 69 ms 0.44 ms

Summary.log:

Cl Time Trans Tps Conc Avg R Min R 50% R 90% R 95% R Max R

--- ---- -------- -------- ----- ----- ----- ----- ----- ----- -----

150 600 1435891 2393.2 149.9 0.1 0.0 0.0 0.2 0.2 3.4

4 600 4895286 8158.8 3.8 0.0 0.0 0.0 0.0 0.0 12.8What was the bottleneck for 150 sessions? Of course, the naps and waits. But I would like to know the name of the concrete resource.

The tests prove – the spin value does matter (as well as the number of users):

Code:

Clients spin bibufs B TPS Tx Durations (ms)

4 10,000 100 4,194,304 8,181 0.49 (have the detailed stats)

100 480,000 64 4,194,304 13,984 7.15

100 480,000 100 4,194,304 13,491 7.41

100 480,000 64 4,194,304 13,405 7.46

20 400,000 120 8,388,608 13,160 1.52

100 480,000 100 8,388,608 12,737 7.85

100 400,000 120 8,388,608 12,560 7.96

80 400,000 120 8,388,608 12,395 6.45

100 100,000 64 4,194,304 12,155 8.23

100 300,000 100 8,388,608 11,638 8.59

100 120,000 64 4,194,304 11,022 9.07

100 150,000 64 4,194,304 10,166 9.84

100 200,000 64 4,194,304 9,735 10.27

100 20,000 64 4,194,304 5,406 18.50

100 10,000 64 4,194,304 3,006 33.27

150 10,000 100 4,194,304 2,164 67.01 (have the detailed stats)How busy the latches when the spin value is optimal? Unfortunately, I don’t have data from the tests with the large spin.

Moreover, the transactions spend in the “begin” status 85% (150 users) and 77% (5 users) of its duration, in other words, the most of the time they are not trying to update a database! Correspondently, the “active” phase lasts only 10 ms and 0.1 ms in the absolute numbers. Bad application code can keep the transactions in the “begin” status as long as you want but it’s not the case for the code used in ATM test. Why the begin phase of the simple transactions is their longest part?

“Phase 2” phase that is a rudiment of the forgotten Two-Phase Commit feature is 3 times shorted than the Active phase (150 users test). Nevertheless, it’s almost 10 times longer than a whole transaction in the 5 users test. What database resources are used during “phase 2”? Why the “phase 2” is not instant when 2PC is not enabled?

“None” status is very short – about 0.3% of transaction duration but they are still visible. Progress seems to do “nothing” during the “none” phase but it still takes some time.

Some data from the tests with 150 and 5 users as well as the additional questions are at the post below.

Last edited: