JamesBowen

19+ years of Progress programming and still learning.

In the past I've applied schema changes from Windows (WebSpeed Workshop for Windows) while the UTF-8 database was located on Linux. Historically you couldn't access a UTF-8 database in shared-memory mode on Linux, for fear of corruption, unless it was accessed in batch mode only.

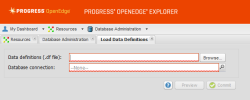

Now I have my application and database 'in the cloud'. How do I apply my schema changes to my database if I can't use the CHUI Data Administrator tool? Is there a batch tool to apply a schema delta file?

INFO: OE 11.3.2

OS: CentOS 6.5 64bit
