
Drew Wanczowski

Guest

This is part four of our series about semantic RAG, in which we show you how to orchestrate a multi-model query pipeline to discover the most relevant information. Before you dive in, be sure to check out Part One: Enhancing GenAI with Multi-Model Data and Knowledge Graphs, Part Two: The Knowledge Graph and Part Three: Content Preparation.


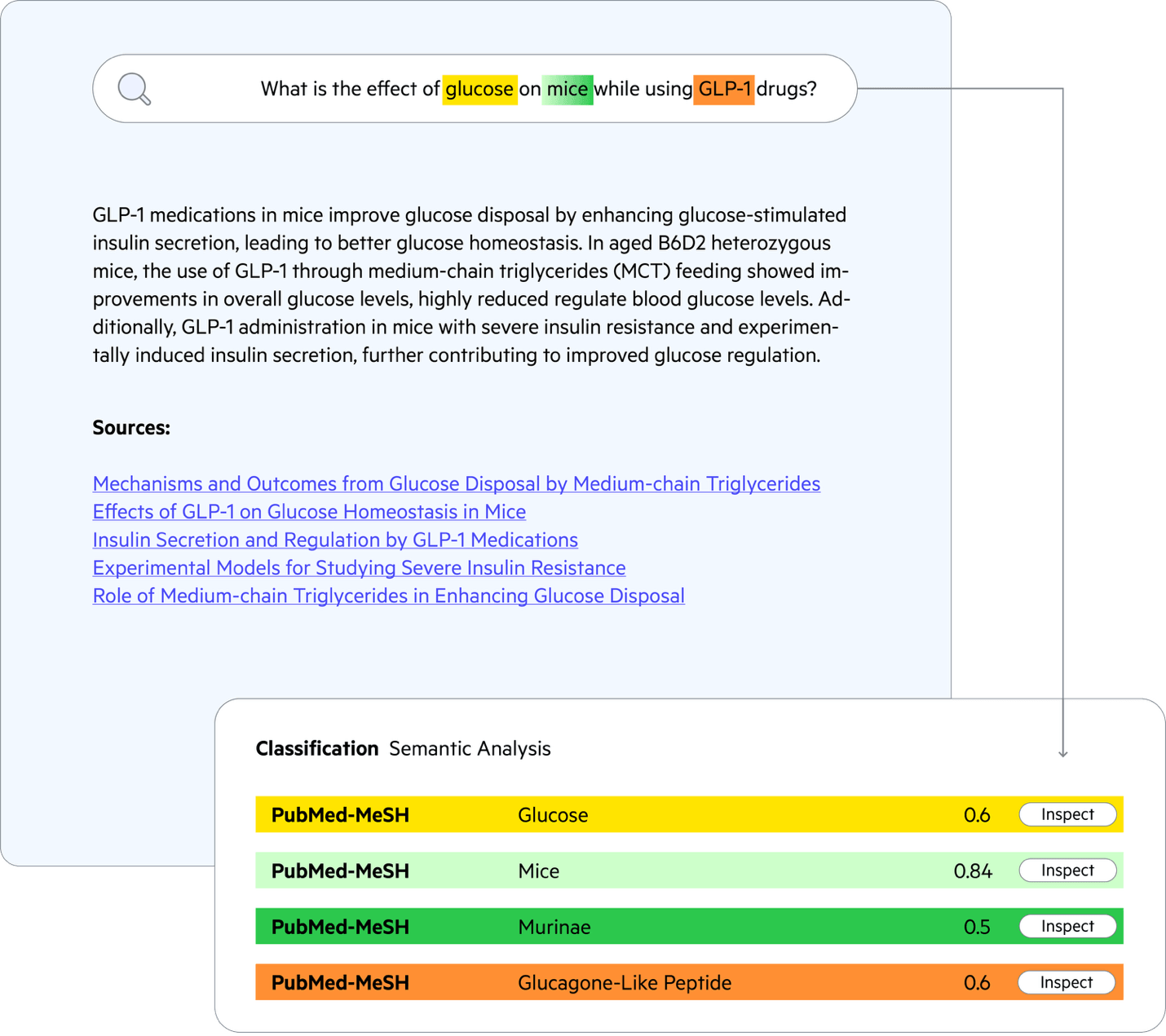

Image 1: Workflow in which a user submits a question, Semaphore analyzes the question, MarkLogic finds relevant content, the prompt is prepared and the prompt is then sent to the LLM.


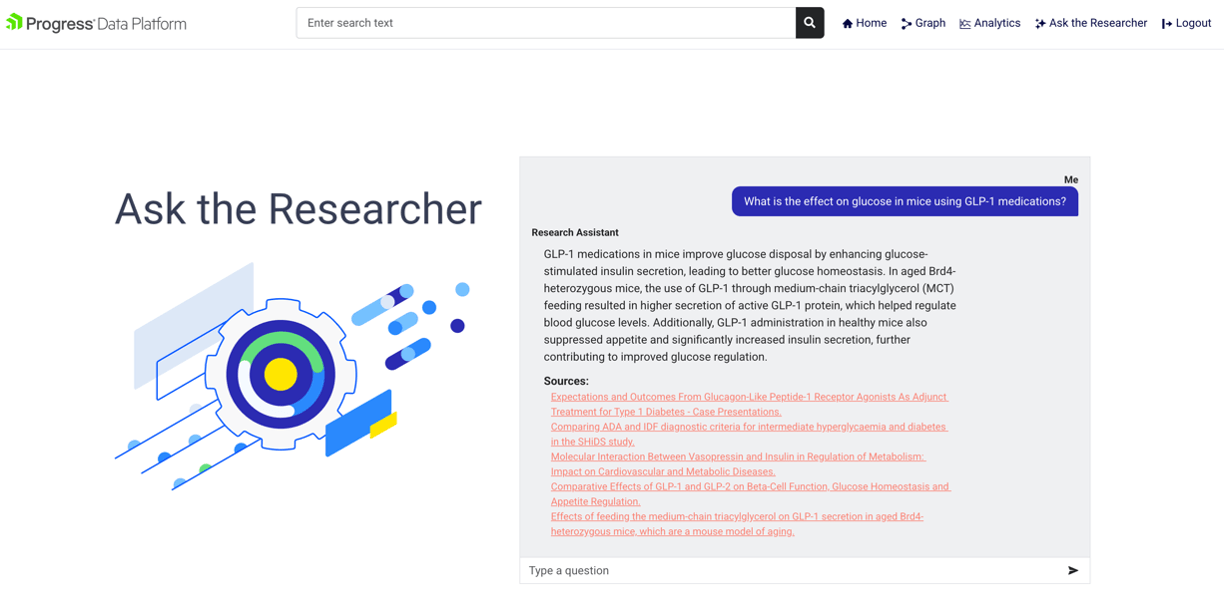

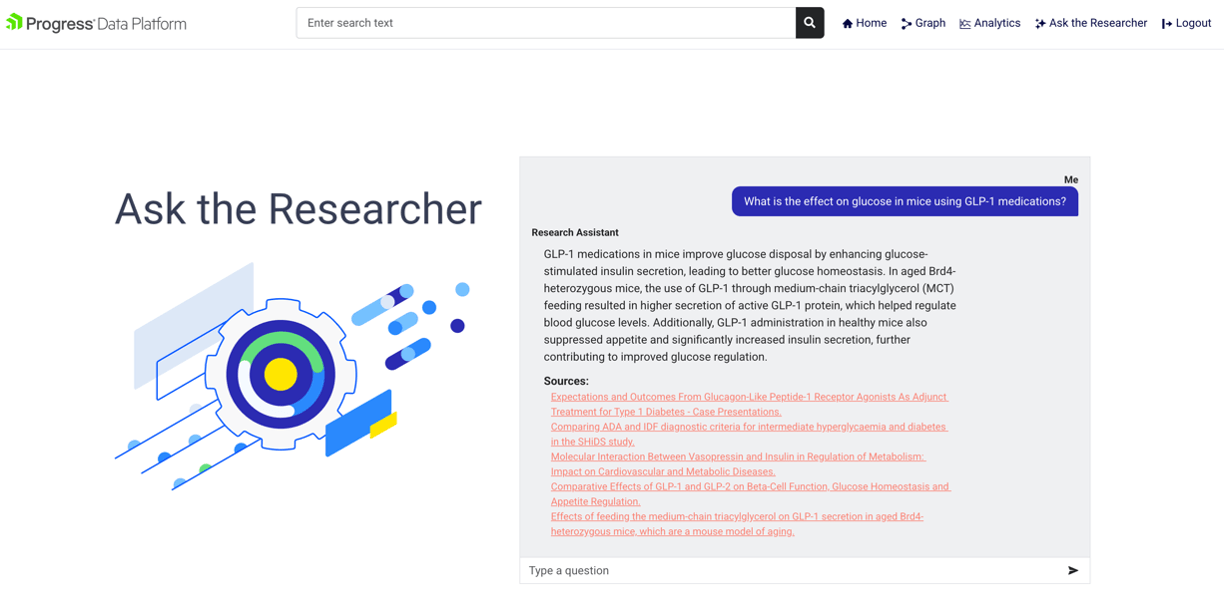


Image 2: Results of hybrid search and use of private data with the GenAI


Step three in our semantic RAG workflow is content discovery. Content discovery is the process of finding relevant information in a large corpus of data. Part of the content discovery process is determining the key concepts within a user’s question, discovering relevant chunks of information pertinent to the question and preparing a prompt for the LLM.

By intercepting the user’s question, we can extract its key concepts with the knowledge graph, just as we did in the content preparation workflow. These concepts then guide a relevance-based search across the text corpus to locate the most relevant information. To improve accuracy, vector capabilities are used to re-rank results and identify semantically similar content. Finally, prompt preparation places these curated chunks in the LLM’s context, enabling it to draw on proprietary knowledge when generating responses. Let’s look at that process step by step.

Discovering Content and GenAI Q&A

The following examples show Java code snippets that use the Progress MarkLogic, Progress Semaphore and LangChain4j SDKs to interact with the MarkLogic, Semaphore and Azure OpenAI components. You can place these calls in a middle-tier application that exposes an API to your end users.


Understanding the User’s Intent

While you could send the user’s question directly to the GenAI, we intercept it first to better understand the user’s intent. The same Semaphore classification process we used earlier to find key concepts in our chunks can also find concepts in the user’s question. The concept labels drive a lexical search across your corpus, while the concept identifiers match content that Semaphore has classified with those concepts based on supporting evidence.

We first create a classification client to use across our application. We use the Spring Framework to read configuration values from an application properties file, but you can supply these values however you see fit.

Code:

```java
// Fetch an access token for Semaphore using the token URL and API key from the application properties.
TokenFetcher tokenFetcher = new TokenFetcher(this.semaphoreConfig.getTokenRequestURL(), this.semaphoreConfig.getKey());
Token token = tokenFetcher.getAccessToken();

// Create a reusable classification client that points at the Semaphore Classification Server.
ClassificationClient classificationClient = new ClassificationClient();
ClassificationConfiguration classificationConfiguration = new ClassificationConfiguration();
classificationConfiguration.setUrl(this.semaphoreConfig.getCsURL());
classificationConfiguration.setApiToken(token.getAccess_token());
classificationClient.setClassificationConfiguration(classificationConfiguration);
```

Once a classification client is established, creating a classification request is easy. The request contains the text of the user’s question and a blank title.

```java
// Classify the user's question; the title is intentionally left blank.
Result result = classificationClient.getClassifiedDocument(new Body(question), new Title(""));
```

Semaphore automatically converts the response into a Java object, from which you can access all of the identified concepts.

Code:

```java
// The result groups the identified concepts; log each group key followed by its concepts.
Map<String, Collection<ClassificationScore>> concepts = result.getAllClassifications();

LOGGER.info("---------Start Concepts identified----------");
for (Map.Entry<String, Collection<ClassificationScore>> entry : concepts.entrySet()) {
    LOGGER.info(entry.getKey() + ":");
    for (ClassificationScore classificationScore : entry.getValue()) {
        LOGGER.info(String.format(" %s %s %s %f",
                classificationScore.getRulebaseClass(),
                classificationScore.getId(),
                classificationScore.getName(),
                classificationScore.getScore()));
    }
}
LOGGER.info("---------Ending Concepts identified---------");
```

With these identified concepts, we can start to build a query. For each concept, we combine a lexical word query on the concept label with an identifier query on the concept ID, so content is matched either by its wording or by having been classified with that concept.
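To make the OR semantics of this query concrete, here is a small in-memory sketch that mimics it without MarkLogic. The `Concept` and `Chunk` records and the regex-based phrase match are hypothetical stand-ins: the regex only approximates `cts.wordQuery` (it ignores stemming, MarkLogic tokenization rules and the punctuation-insensitive option), and the ID check assumes that classified chunks carry their concept IDs, as the `id` property query in the next snippet implies.

```java
import java.util.List;
import java.util.Set;
import java.util.regex.Pattern;

// Hypothetical in-memory model: Concept and Chunk are illustrative types, not Semaphore or MarkLogic classes.
final class ConceptMatchSketch {

    record Concept(String id, String label) {}

    record Chunk(String text, Set<String> conceptIds) {}

    // Case-insensitive whole-phrase match on the concept label: a rough stand-in for cts.wordQuery.
    static boolean lexicalHit(Chunk chunk, Concept concept) {
        Pattern pattern = Pattern.compile("\\b" + Pattern.quote(concept.label()) + "\\b",
                Pattern.CASE_INSENSITIVE);
        return pattern.matcher(chunk.text()).find();
    }

    // Identifier match: the chunk was classified with this concept during content preparation.
    static boolean identifierHit(Chunk chunk, Concept concept) {
        return chunk.conceptIds().contains(concept.id());
    }

    // OR semantics: a single lexical or identifier hit for any concept is enough to match.
    static boolean matches(Chunk chunk, List<Concept> concepts) {
        for (Concept concept : concepts) {
            if (lexicalHit(chunk, concept) || identifierHit(chunk, concept)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        List<Concept> concepts = List.of(new Concept("concept-42", "vector search"));
        Chunk byWord = new Chunk("An introduction to Vector Search.", Set.of());
        Chunk byId = new Chunk("Finding similar embeddings quickly.", Set.of("concept-42"));
        Chunk neither = new Chunk("Release notes for the installer.", Set.of());
        // prints "true true false"
        System.out.println(matches(byWord, concepts) + " " + matches(byId, concepts) + " " + matches(neither, concepts));
    }
}
```

The identifier branch is what lets a chunk match even when it never uses the concept’s label verbatim.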

Code:

```java
// Classify the user's question to identify its key concepts.
Result result = classificationClient.getClassifiedDocument(new Body(query.text()), new Title(BLANK));

// Convert these concepts into a MarkLogic query to find relevant chunks.
List<CtsQueryExpr> queryExprList = new ArrayList<>();
for (Map.Entry<String, Collection<ClassificationScore>> entry : result.getAllClassifications().entrySet()) {
    for (ClassificationScore classificationScore : entry.getValue()) {
        // Lexical match on the concept label.
        queryExprList.add(op.cts.wordQuery(classificationScore.getName(), "case-insensitive", "punctuation-insensitive", "lang=en"));
        // Match on the concept identifier stored in classified content.
        queryExprList.add(op.cts.jsonPropertyValueQuery("id", classificationScore.getId()));
    }
}
```

Finding Relevant Content

We now have a well-structured query based on the user’s intent. Next, we build a composable query with the MarkLogic Optic API to find the relevant chunks. The Optic API can query all forms of data stored in MarkLogic Server. By default, MarkLogic Server uses Term Frequency/Inverse Document Frequency (TF-IDF) to score and order results.
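For intuition, the sketch below computes textbook TF-IDF over a tiny tokenized corpus: a term’s weight grows with how often it appears in a document (TF) and shrinks with how many documents contain it (IDF). This is a teaching sketch of that textbook definition, not MarkLogic’s internal scoring formula, so its numbers will not match the `score` column returned by the server.

```java
import java.util.List;

// Textbook TF-IDF for illustration only; MarkLogic computes its own variant internally.
final class TfIdfSketch {

    // Raw term frequency: how often the term occurs in one tokenized document.
    static long termFrequency(String term, List<String> document) {
        return document.stream().filter(term::equals).count();
    }

    // Inverse document frequency: ln(N / df); a term found in no document contributes 0.
    static double inverseDocumentFrequency(String term, List<List<String>> corpus) {
        long documentFrequency = corpus.stream().filter(doc -> doc.contains(term)).count();
        return documentFrequency == 0 ? 0.0 : Math.log((double) corpus.size() / documentFrequency);
    }

    // Score of a document for a query: the sum of tf * idf over the query terms.
    static double score(List<String> queryTerms, List<String> document, List<List<String>> corpus) {
        double total = 0.0;
        for (String term : queryTerms) {
            total += termFrequency(term, document) * inverseDocumentFrequency(term, corpus);
        }
        return total;
    }

    public static void main(String[] args) {
        List<List<String>> corpus = List.of(
                List.of("vector", "search", "vector"),
                List.of("lexical", "search"),
                List.of("knowledge", "graph"));
        for (List<String> doc : corpus) {
            System.out.printf("%.3f %s%n", score(List.of("vector", "search"), doc, corpus), doc);
        }
    }
}
```

A rare term such as "vector" outweighs a common one such as "search", which is the behavior relevance ranking relies on.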

Code:

```java
// Convert these concepts into a MarkLogic query to find relevant chunks.
List<CtsQueryExpr> queryExprList = new ArrayList<>();
for (Map.Entry<String, Collection<ClassificationScore>> entry : result.getAllClassifications().entrySet()) {
    for (ClassificationScore classificationScore : entry.getValue()) {
        queryExprList.add(op.cts.wordQuery(classificationScore.getName(), "case-insensitive", "punctuation-insensitive"));
        queryExprList.add(op.cts.jsonPropertyValueQuery("id", classificationScore.getId()));
    }
}

// Build the query to only look at chunks of documents that have been loaded.
CtsQueryExpr andQuery = op.cts.andQuery(
        op.cts.collectionQuery(DocumentConstants.COLLECTION_CHUNK),
        op.cts.orQuery(queryExprList.toArray(CtsQueryExpr[]::new)));

// The following query plan searches for the matching fragments using the key concepts
// extracted from the knowledge graph, ordered by relevance score.
PlanBuilder.ModifyPlan plan =
        op.fromSearchDocs(andQuery)
                .orderBy(op.desc(op.col("score")))
                .limit(10);
```

Hybrid Search using Lexical Search and Vector Search

As the query above shows, we are using relevance-based search. MarkLogic Server 12 introduces two new features to aid search: Best Match 25 (BM25) scoring and native vector search.

Like TF-IDF, BM25 is a relevance ranking algorithm for lexical queries. It considers factors such as term frequency and document length, and it exposes a tunable parameter that controls how much weight document length carries.
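The sketch below implements the textbook Okapi BM25 formula, using the common `ln(1 + (N - df + 0.5) / (df + 0.5))` IDF variant, to show how term-frequency saturation (`k1`) and length normalization (`b`) interact. We assume MarkLogic’s `withBm25LengthWeight` plays a role comparable to `b`, but we have not confirmed that the two map one-to-one or that the server uses exactly this formula.

```java
import java.util.List;

// Textbook Okapi BM25 with the non-negative "+ 1" IDF variant, for illustration only.
final class Bm25Sketch {

    // k1 controls term-frequency saturation; 1.2 is a common default.
    static final double K1 = 1.2;

    // b controls how strongly document length normalizes the score (0 = ignore length, 1 = full normalization).
    static double score(List<String> queryTerms, List<String> document, List<List<String>> corpus, double b) {
        double n = corpus.size();
        double averageLength = corpus.stream().mapToInt(List::size).average().orElse(1.0);
        double lengthNorm = 1.0 - b + b * document.size() / averageLength;
        double total = 0.0;
        for (String term : queryTerms) {
            long df = corpus.stream().filter(doc -> doc.contains(term)).count();
            double idf = Math.log((n - df + 0.5) / (df + 0.5) + 1.0);
            long tf = document.stream().filter(term::equals).count();
            total += idf * (tf * (K1 + 1.0)) / (tf + K1 * lengthNorm);
        }
        return total;
    }

    public static void main(String[] args) {
        List<List<String>> corpus = List.of(
                List.of("bm25", "ranking"),
                List.of("bm25", "ranking", "with", "a", "much", "longer", "body"),
                List.of("vector", "index"));
        for (double b : new double[] {0.0, 0.75}) {
            System.out.printf("b=%.2f short=%.4f long=%.4f%n", b,
                    score(List.of("bm25"), corpus.get(0), corpus, b),
                    score(List.of("bm25"), corpus.get(1), corpus, b));
        }
    }
}
```

With `b = 0`, the short and long documents score the same; raising `b` favors the shorter document for the same term frequency.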

Vector search lets you query the vector embeddings stored in MarkLogic Server. To support it, MarkLogic Server introduces a specialized vector index along with ANN (Approximate Nearest Neighbor) search. ANN finds content with similar vectors quickly and efficiently by approximating the nearest matches rather than comparing the query against every stored vector.

In this example, vector search is used alongside lexical search. We embed the user’s question as a vector and use ANN to find content with similar embeddings. A full outer join combines the vector and lexical results, so content missed by one method can still be found by the other. A hybrid score computed from the lexical score and the vector distance then re-ranks the combined results, placing the most relevant content at the top.
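The full outer join is the step that keeps content found by only one method. The following library-free sketch mimics that merge on plain maps keyed by hypothetical chunk IDs, filling in the same defaults the Optic example that follows binds: a lexical score of 0 for chunks found only by vector search, and a distance of 2 (the largest possible cosine distance) for chunks found only by lexical search. It deliberately stops short of computing the hybrid score, since we are not reproducing the exact formula behind `op.vec.vectorScore`.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// In-memory illustration of a full outer join between lexical and vector results.
final class OuterJoinSketch {

    // Defaults mirroring the Optic example: no lexical hit -> score 0, no vector hit -> distance 2.
    static final double DEFAULT_SCORE = 0.0;
    static final double DEFAULT_DISTANCE = 2.0; // the largest possible cosine distance

    record Row(String id, double score, double distance) {}

    // Every ID from either side appears exactly once; missing values get the defaults.
    static List<Row> fullOuterJoin(Map<String, Double> lexicalScores, Map<String, Double> vectorDistances) {
        Set<String> ids = new LinkedHashSet<>(lexicalScores.keySet());
        ids.addAll(vectorDistances.keySet());
        List<Row> rows = new ArrayList<>();
        for (String id : ids) {
            rows.add(new Row(id,
                    lexicalScores.getOrDefault(id, DEFAULT_SCORE),
                    vectorDistances.getOrDefault(id, DEFAULT_DISTANCE)));
        }
        return rows;
    }

    public static void main(String[] args) {
        Map<String, Double> lexical = Map.of("chunk-a", 5.0, "chunk-b", 3.0);
        Map<String, Double> vector = Map.of("chunk-b", 0.1, "chunk-c", 0.4);
        fullOuterJoin(lexical, vector).forEach(System.out::println);
    }
}
```

An inner join would keep only `chunk-b`; the outer join keeps all three, which is why the defaults are needed before scoring.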

Code:

```java
// Build an Optic query plan to find the relevant chunks. MarkLogic uses TF-IDF by default,
// but you can configure it to use BM25 instead.
PlanSearchOptions planSearchOptions = op.searchOptions()
        .withScoreMethod(PlanSearchOptions.ScoreMethod.BM25)
        .withBm25LengthWeight(0.5);

// The following query plan searches for the matching fragments using the key concepts
// extracted from the knowledge graph. It joins in a view that contains the vector embedding
// for each chunk and re-ranks the results using a hybrid score of cosine similarity and BM25.

// Build the lexical search plan.
PlanBuilder.ModifyPlan lexicalSearchPlan =
        op.fromSearchDocs(andQuery, null, planSearchOptions)
                .limit(LEXICAL_LIMIT);

// Fetch the vector embedding for the user's query to re-rank the search results.
Response<Embedding> response = this.embeddingModel.embed(query.text());
Map<String, Object> options = new HashMap<>();

// Build the vector search plan: ANN over the chunk embeddings, closest first.
PlanBuilder.ModifyPlan vectorSearchPlan =
        op.fromView("GenAI", "Chunks", null, op.fragmentIdCol("vectorsDocId"))
                .joinDocUri(op.col("uri"), op.fragmentIdCol("vectorsDocId"))
                .annTopK(
                        TOP_K_COUNT,
                        op.col("embedding"),
                        op.vec.vector(op.xs.floatSeq(response.content().vector())),
                        op.col("distance"),
                        options)
                .orderBy(op.asc(op.col("distance")));

// Set default values in case a document is found by only one plan:
// a missing lexical score becomes 0 and a missing distance becomes 2 (the maximum cosine distance).
ServerExpression scoreBinding = op.caseExpr(op.when(op.isDefined(op.col("score")), op.col("score")), op.elseExpr(op.xs.longVal(0L)));
ServerExpression distanceBinding = op.caseExpr(op.when(op.isDefined(op.col("distance")), op.col("distance")), op.elseExpr(op.xs.longVal(2L)));

// Combine both plans with a full outer join, compute the hybrid score and attach article metadata.
PlanBuilder.ModifyPlan plan =
        lexicalSearchPlan
                .joinFullOuter(
                        vectorSearchPlan,
                        op.on(op.fragmentIdCol("fragmentId"), op.fragmentIdCol("vectorsDocId")))
                .bind(op.as("score", scoreBinding))
                .bind(op.as("distance", distanceBinding))
                .bind(op.as("hybridScore", op.vec.vectorScore(op.col("score"), op.col("distance"))))
                .joinInner(
                        op.fromView("Article", "Metadata"),
                        op.on(op.col("contentURI"), op.col("source")))
                .orderBy(op.desc(op.col("hybridScore")))
                .limit(FINAL_LIMIT);
```

This hybrid search approach lets your retrieval flow find both semantically similar concepts and exact matches, such as proper names and labels, surfacing the most relevant documents to inform the output of the generative model.

Hybrid Search using Lexical Search and KNN Vector Search

KNN (k-nearest neighbors) is an exact method that finds the points closest to a query, but it can be slower on larger datasets because it compares the query against every candidate. KNN relies on distance metrics such as Euclidean distance or cosine distance to determine the closest points. When precision matters more than efficiency, KNN may be the better choice.

This example takes a similar approach to the previous one. Note, however, that here the lexical search runs first, and its results are then re-ranked using the vector score.
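To make the exact-versus-approximate distinction concrete, the sketch below performs brute-force KNN: it computes the cosine distance (1 minus cosine similarity) between the query and every stored vector, then keeps the k closest. The vectors and IDs are hypothetical; the point is that every candidate is compared, which is what makes KNN exact and also what makes it slower than ANN on large datasets.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;

// Brute-force exact KNN over an in-memory map of hypothetical chunk IDs to embeddings.
final class ExactKnnSketch {

    // Cosine distance = 1 - cosine similarity: 0 for the same direction, 2 for opposite directions.
    // Zero-length vectors would produce NaN; real code should reject them.
    static double cosineDistance(float[] a, float[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("dimension mismatch");
        }
        double dot = 0.0, normA = 0.0, normB = 0.0;
        for (int i = 0; i < a.length; i++) {
            dot += (double) a[i] * b[i];
            normA += (double) a[i] * a[i];
            normB += (double) b[i] * b[i];
        }
        return 1.0 - dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Exact KNN: measure the distance to every vector, sort ascending, keep the first k IDs.
    static List<String> nearest(float[] query, Map<String, float[]> vectors, int k) {
        List<Map.Entry<String, float[]>> entries = new ArrayList<>(vectors.entrySet());
        entries.sort(Comparator.comparingDouble(e -> cosineDistance(query, e.getValue())));
        return entries.stream().limit(k).map(Map.Entry::getKey).toList();
    }

    public static void main(String[] args) {
        Map<String, float[]> vectors = Map.of(
                "chunk-a", new float[] {0.9f, 0.1f},
                "chunk-b", new float[] {0.1f, 0.9f},
                "chunk-c", new float[] {0.7f, 0.7f});
        System.out.println(nearest(new float[] {1f, 0f}, vectors, 2));
    }
}
```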

Code:

```java
// Build an Optic query plan to find the relevant chunks. MarkLogic uses TF-IDF by default,
// but you can configure it to use BM25 instead.
PlanSearchOptions planSearchOptions = op.searchOptions()
        .withScoreMethod(PlanSearchOptions.ScoreMethod.BM25)
        .withBm25LengthWeight(0.5);

// Fetch the vector embedding for the user's query (the same call as in the previous example).
Response<Embedding> response = this.embeddingModel.embed(query.text());

// The following query plan searches for the matching fragments using the key concepts
// extracted from the knowledge graph. It joins in a view that contains the vector embedding
// for each chunk and re-ranks the results using a hybrid score of cosine similarity and BM25.
PlanBuilder.ModifyPlan plan =
        op.fromSearchDocs(andQuery, null, planSearchOptions)
                // Attach the stored embedding to each lexical match.
                .joinInner(
                        op.fromView("GenAI", "Chunks", null, op.fragmentIdCol("vectorsDocId")),
                        op.on(op.fragmentIdCol("fragmentId"), op.fragmentIdCol("vectorsDocId")))
                // Pre-narrow to 20 lexical matches before the vector comparison.
                .limit(20)
                // Compare each remaining chunk's embedding with the query embedding (exact, KNN-style).
                .bind(op.as(op.col("distance"), op.vec.cosine(
                        op.vec.vector(op.col("embedding")),
                        op.vec.vector(op.xs.floatSeq(response.content().vector())))))
                .bind(op.as("hybridScore",
                        op.vec.vectorScore(op.col("score"), op.col("distance"))))
                // Keep the five best results by hybrid score, then attach article metadata.
                .orderBy(op.desc(op.col("hybridScore")))
                .limit(5)
                .joinInner(
                        op.fromView("Article", "Metadata"),
                        op.on(op.col("contentURI"), op.col("source")));
```

With larger datasets, it is a good idea to pre-narrow the set of items you compare. This hybrid approach does exactly that: the lexical matches filter the content down before the exact vector comparison runs.
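The pre-narrowing pattern can be sketched without MarkLogic as a two-stage pipeline: keep the top `narrowTo` lexical hits, then compute exact cosine distances for only those candidates and keep the best `finalLimit`. The `Candidate` record is hypothetical, and for simplicity the second stage ranks by cosine distance alone rather than by the hybrid `vectorScore` used in the Optic example.

```java
import java.util.Comparator;
import java.util.List;

// Two-stage retrieval sketch: lexical pre-filter, then exact vector re-ranking of the survivors.
final class TwoStageRerankSketch {

    // Hypothetical lexical hit: chunk ID, lexical relevance score and stored embedding.
    record Candidate(String id, double lexicalScore, float[] embedding) {}

    // Cosine distance = 1 - cosine similarity.
    static double cosineDistance(float[] a, float[] b) {
        double dot = 0.0, normA = 0.0, normB = 0.0;
        for (int i = 0; i < a.length; i++) {
            dot += (double) a[i] * b[i];
            normA += (double) a[i] * a[i];
            normB += (double) b[i] * b[i];
        }
        return 1.0 - dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    static List<String> rerank(List<Candidate> lexicalHits, float[] query, int narrowTo, int finalLimit) {
        return lexicalHits.stream()
                // Stage 1: keep only the strongest lexical matches.
                .sorted(Comparator.comparingDouble(Candidate::lexicalScore).reversed())
                .limit(narrowTo)
                // Stage 2: exact vector comparison on the narrowed set, closest first.
                .sorted(Comparator.comparingDouble(c -> cosineDistance(query, c.embedding())))
                .limit(finalLimit)
                .map(Candidate::id)
                .toList();
    }

    public static void main(String[] args) {
        List<Candidate> hits = List.of(
                new Candidate("chunk-a", 10.0, new float[] {0f, 1f}),
                new Candidate("chunk-b", 9.0, new float[] {1f, 0f}),
                new Candidate("chunk-c", 1.0, new float[] {1f, 0f}));
        System.out.println(rerank(hits, new float[] {1f, 0f}, 2, 2));
    }
}
```

Note the trade-off: a chunk that is semantically close but lexically weak (`chunk-c` here) falls outside the first stage and is never compared at all.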

Reciprocal Rank Fusion (RRF) vs. MarkLogic Vector Score

Reciprocal Rank Fusion (RRF) is a method for combining results from different ranking algorithms. In our example, we use BM25 to compute relevance scores for lexical search and cosine distance to measure the similarity between vectors. These raw values are on incompatible scales, so they cannot be compared directly. RRF avoids the problem by using each item’s rank rather than its raw score: an item’s fused score is the sum of 1 / (k + rank) over every result list in which it appears, where k is a constant (commonly 60). As a result, items that appear in both result sets rise toward the top.

The MarkLogic Server vector score function gives you more granular control over its inputs. In the Optic code examples above, we label its output as a “hybrid” score. The vector score formula boosts the lexical score of documents with higher cosine similarity, so semantically similar documents rise in the search results. It serves as an alternative to RRF for combining search results.
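For comparison with the vector score approach, here is a minimal RRF sketch. Each input list is a ranking (best first) of hypothetical chunk IDs, such as one list from BM25 and one from vector search; an item’s fused score is the sum of 1 / (k + rank) over the lists it appears in, with k = 60 as the commonly used constant. Ties are broken alphabetically so the output is deterministic.

```java
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Reciprocal Rank Fusion over several best-first rankings of IDs.
final class RrfSketch {

    // k dampens the influence of the very top ranks; 60 is the commonly used value.
    static final int DEFAULT_K = 60;

    // Sum 1 / (k + rank) for every list an ID appears in (ranks are 1-based).
    static Map<String, Double> fuse(List<List<String>> rankings, int k) {
        Map<String, Double> fused = new HashMap<>();
        for (List<String> ranking : rankings) {
            for (int i = 0; i < ranking.size(); i++) {
                int rank = i + 1;
                fused.merge(ranking.get(i), 1.0 / (k + rank), Double::sum);
            }
        }
        return fused;
    }

    // Order IDs by fused score (highest first), breaking ties by ID.
    static List<String> fusedOrder(List<List<String>> rankings, int k) {
        Comparator<Map.Entry<String, Double>> byFusedScoreDesc =
                Map.Entry.<String, Double>comparingByValue().reversed();
        return fuse(rankings, k).entrySet().stream()
                .sorted(byFusedScoreDesc.thenComparing(Map.Entry.<String, Double>comparingByKey()))
                .map(Map.Entry::getKey)
                .toList();
    }

    public static void main(String[] args) {
        List<String> lexicalRanking = List.of("a", "b", "c");
        List<String> vectorRanking = List.of("c", "a", "d");
        // prints [a, c, b, d]
        System.out.println(fusedOrder(List.of(lexicalRanking, vectorRanking), DEFAULT_K));
    }
}
```

Because RRF only uses ranks, it needs no knowledge of how BM25 or cosine values are scaled; the trade-off is that it discards how much better one result was than the next.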

Providing the New Context to the GenAI

The final part of the RAG workflow is to send the query to the LLM. We build a new prompt that draws on our semantic knowledge graph for new terminology and on the chunks discovered during the search. This enriches the LLM’s context and yields a more accurate and traceable response.
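In the LangChain4j setup shown later in this section, `DefaultRetrievalAugmentor` takes care of injecting the retrieved content into the prompt. To show the idea behind that step, here is a hand-rolled, library-free sketch that numbers each retrieved chunk and tags it with its source URI so the model can cite it. The `RetrievedChunk` record and the instruction wording are our own assumptions, not LangChain4j’s template.

```java
import java.util.List;

// Library-free illustration of "stuffing" retrieved chunks into a prompt with citable sources.
final class PromptAssemblySketch {

    // Hypothetical retrieved chunk: the source URI used for citations plus the chunk text.
    record RetrievedChunk(String source, String text) {}

    static String buildPrompt(String question, List<RetrievedChunk> chunks) {
        StringBuilder prompt = new StringBuilder();
        prompt.append("Answer the question using only the numbered context below. ")
              .append("Cite sources by their number.\n\n");
        // Number each chunk so the answer can refer back to [1], [2], ...
        for (int i = 0; i < chunks.size(); i++) {
            RetrievedChunk chunk = chunks.get(i);
            prompt.append('[').append(i + 1).append("] (")
                  .append(chunk.source()).append(") ")
                  .append(chunk.text()).append('\n');
        }
        prompt.append("\nQuestion: ").append(question);
        return prompt.toString();
    }

    public static void main(String[] args) {
        System.out.println(buildPrompt("What is BM25?", List.of(
                new RetrievedChunk("/articles/bm25.json", "BM25 is a relevance ranking algorithm."),
                new RetrievedChunk("/articles/tfidf.json", "TF-IDF weighs terms by rarity."))));
    }
}
```

Keeping the source next to each chunk is what makes the final answer traceable back to the content it used.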

MarkLogic returns the search results as a RowSet, which we convert into a list of Content objects that LangChain4j can use.

Code:

```java
// Convert the row result set to a list of Content objects for LangChain4j.
List<Content> chunks = new ArrayList<>();
RowSet<RowRecord> rows = rowManager.resultRows(plan);
rows.stream().forEach(row -> {
    String source = row.getString("source");
    String title = row.getString("title");
    String abstractText = row.getString("text");
    String text = "Title: " + title + " Abstract: " + abstractText;

    // Keep the source URI and scores as metadata so the answer can cite its sources.
    Metadata metadata = new Metadata();
    metadata.put("uri", row.getString("uri"));
    metadata.put("title", title);
    metadata.put("text", text);
    metadata.put("source", source);
    metadata.put("score", row.getString("score"));
    metadata.put("distance", row.getString("distance"));
    metadata.put("hybridScore", row.getString("hybridScore"));

    Content content = Content.from(new TextSegment(text, metadata));
    chunks.add(content);
});
```

A controller handles the incoming user query and uses a LangChain4j AI service, the QueryAssistant interface below, to add your internal knowledge to the LLM’s context.

Code:

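```java
import dev.langchain4j.service.Result;
import dev.langchain4j.service.SystemMessage;
import dev.langchain4j.service.UserMessage;

// AI service interface: LangChain4j generates the implementation at runtime.
public interface QueryAssistant {

    @SystemMessage("You are a researcher. " +
            "1. Answer the question in a few sentences. " +
            "2. Answer the question using the text provided. " +
            "3. Answer the question using chat memory. " +
            "4. If you don't know the answer, just say that you don't know. " +
            "5. You should not use information outside the context or chat memory for answering.")
    Result<String> chat(@UserMessage String question);
}
```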

Code:

```java
// Build the QueryAssistant that interacts with the LLM. The retrieval augmentor compresses the
// query together with the chat history, retrieves content from MarkLogic and adds it to the prompt.
QueryAssistant queryAssistant = AiServices.builder(QueryAssistant.class)
        .chatLanguageModel(this.chatLanguageModel)
        .chatMemory(this.chatMemory)
        .retrievalAugmentor(DefaultRetrievalAugmentor.builder()
                .contentRetriever(this.progressDataPlatformQueryRetriever)
                .queryTransformer(new CompressingQueryTransformer(this.chatLanguageModel))
                .build())
        .build();
```

The final result provides a textual answer to the question along with citation links to the content used to answer it.


In the next blog, we’re going to look at how to integrate this flow with the chatbot in your React application’s middle tier.

To explore the full process for designing a RAG workflow to enhance the accuracy of your LLM responses, download our Semantic RAG whitepaper.
