
Drew Wanczowski

Guest

This is part two of our semantic RAG series, where we dive into the value of knowledge graphs and how to build one. Be sure to read part one first: Enhancing GenAI with Multi-Model Data and Knowledge Graphs.

The use of knowledge graphs has been on the rise. Popularized by Google, which launched its Knowledge Graph in 2012, they help describe the world in clear, concise terminology. Organizations use knowledge graphs as part of a semantic layer that adds context to other applications and helps inform users about topics of interest.

Knowledge graphs are composed of interconnected objects and facts. For example, the statement “High Fructose Corn Syrup is a Sweetening Agent” links a subject (High Fructose Corn Syrup) to an object (Sweetening Agent) through a relationship (is a). Each such statement reflects a real-world fact. Knowledge graphs can model business processes, real-life physical objects or even entire domains of study, such as materials science.
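Each such fact is a triple made of a subject, a predicate and an object. The minimal Python sketch below stores the sweetener fact that way and answers a transitive "is a" query. The predicate name `is_a`, the extra "Food Additive" fact and the helper names are illustrative assumptions, not the Semaphore data model. Production graphs typically use RDF triples queried with SPARQL.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    """One fact: subject --predicate--> object."""
    subject: str
    predicate: str
    obj: str


# Illustrative facts; the predicate name "is_a" is an assumption for this sketch.
facts = {
    Triple("High Fructose Corn Syrup", "is_a", "Sweetening Agent"),
    Triple("Sweetening Agent", "is_a", "Food Additive"),
}


def objects_of(graph: set[Triple], subject: str, predicate: str) -> list[str]:
    """Return every object linked to `subject` by `predicate`."""
    return sorted(t.obj for t in graph if t.subject == subject and t.predicate == predicate)


def ancestors(graph: set[Triple], subject: str, predicate: str = "is_a") -> list[str]:
    """Follow `predicate` links transitively; the visited check prevents looping on cycles."""
    result: list[str] = []
    frontier = objects_of(graph, subject, predicate)
    while frontier:
        node = frontier.pop()
        if node not in result:
            result.append(node)
            frontier.extend(objects_of(graph, node, predicate))
    return result


print(objects_of(facts, "High Fructose Corn Syrup", "is_a"))
print(ancestors(facts, "High Fructose Corn Syrup"))
```

Running it prints `['Sweetening Agent']` and then `['Sweetening Agent', 'Food Additive']`. Following a chain of links this way lets a graph answer questions that no single stored fact answers directly.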

In the context of retrieval-augmented generation (RAG), knowledge graphs bring tremendous value. These networked facts can be used to interpret user input, provide context to the AI and aid in data discovery. This is particularly helpful when people use different words to express the same idea.

For example, the hormone “glucagon-like peptide-1” can be stored in the knowledge graph as the preferred label for that concept, even though it is commonly referred to by its abbreviation, “GLP-1.” The same concept may also be named differently depending on the context, such as a country or a research community. In some regions, two terms for isoglucose, High Fructose Corn Syrup and Glucose-fructose syrup, are used interchangeably.

By encoding these cases in your knowledge graph, you can supplement the knowledge of the AI with your proprietary information.
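The label handling described above corresponds closely to W3C SKOS, where a concept has one `skos:prefLabel` and any number of `skos:altLabel` values. The sketch below uses a tiny hand-built concept table with hypothetical concept IDs. It recognizes concepts in a user query by any of their labels and expands the query with every known label. This is one way a RAG pipeline could use the graph to interpret input. It is not Semaphore's API.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Concept:
    concept_id: str                                       # hypothetical identifier
    pref_label: str                                       # preferred label (cf. skos:prefLabel)
    alt_labels: list[str] = field(default_factory=list)   # synonyms (cf. skos:altLabel)

    def labels(self) -> list[str]:
        return [self.pref_label, *self.alt_labels]


CONCEPTS = [
    Concept("C001", "glucagon-like peptide-1", ["GLP-1"]),
    Concept("C002", "isoglucose", ["High Fructose Corn Syrup", "Glucose-fructose syrup"]),
]

# Case-insensitive index from every label to its concept.
LABEL_INDEX = {label.lower(): c for c in CONCEPTS for label in c.labels()}


def find_concepts(query: str) -> list[Concept]:
    """Return concepts whose labels appear in the query as whole phrases."""
    text = query.lower()
    found: dict[str, Concept] = {}
    for label, concept in LABEL_INDEX.items():
        # Lookarounds stop "glp-1" from matching inside a longer token such as "glp-10".
        if re.search(rf"(?<![\w-]){re.escape(label)}(?![\w-])", text):
            found[concept.concept_id] = concept
    return list(found.values())


def expand_query(query: str) -> list[str]:
    """Append every label of each recognized concept so retrieval matches any synonym."""
    terms = [query]
    for concept in find_concepts(query):
        terms.extend(label for label in concept.labels() if label not in terms)
    return terms


print(expand_query("GLP-1 side effects"))
```

For example, `expand_query("GLP-1 side effects")` returns `['GLP-1 side effects', 'glucagon-like peptide-1', 'GLP-1']`, so a retriever can match documents that use either name. The phrase lookup is a deliberate simplification of what a classification engine does.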

Designing a Knowledge Graph

Knowledge graphs can be built in many ways. The Progress Semaphore platform has an interactive user interface that subject matter experts (SMEs) can use to build knowledge models. These models consist of topics and concepts that describe a particular knowledge domain. Knowledge models can be imported from public-domain ontologies and taxonomies or from controlled vocabularies, such as product catalogs. They can also be defined collaboratively by your SMEs. A common misconception is that knowledge models must be created from scratch. We will walk through a few ways to use information that is already available to build your models.

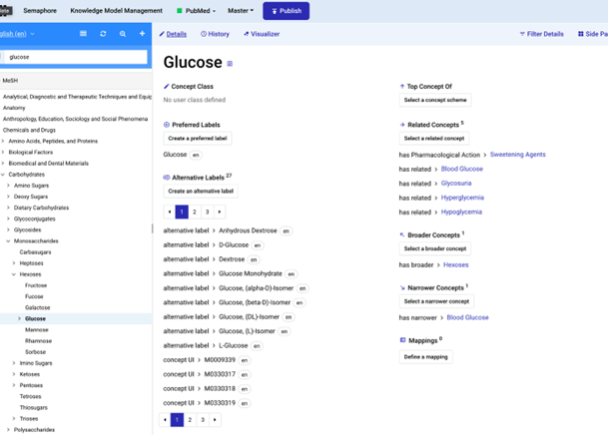

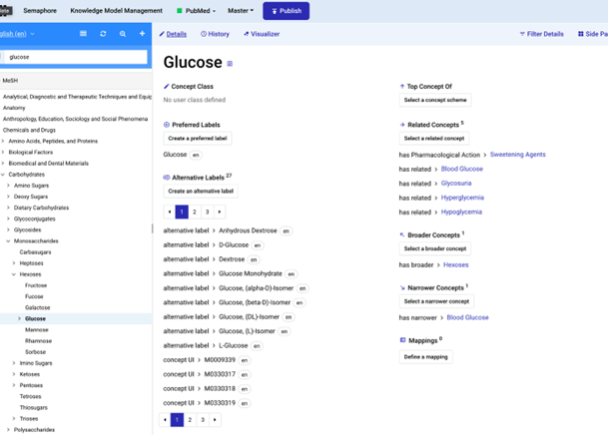

The first common way to jump-start knowledge model creation is to start from an industry-standard model. The example illustrated below is an import of the NIH Medical Subject Headings (MeSH), an industry-standard vocabulary used to classify medical research articles. Many industries have publicly available ontologies or taxonomies. Examples include FIBO (Financial Industry Business Ontology) for finance, IPTC Media Topics for news publishing and the large linked data set provided by the Library of Congress. These models can be imported into the Semaphore knowledge model manager as the basis for your model. They can also be linked to an existing model to extend your own internal knowledge.

Image 1: Managing concepts of a knowledge model in the Semaphore platform
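Many of the public vocabularies mentioned above are distributed as RDF, and taxonomies are commonly expressed with the W3C SKOS vocabulary (`skos:prefLabel`, `skos:altLabel`, `skos:broader`). The sketch below gives a rough idea of what an import has to extract by reading a few N-Triples lines into per-concept records. Several caveats apply:

- The `example.org` IRIs and labels are invented.
- The regular expression only handles the simple line shapes shown, with no escapes, blank nodes or datatypes.
- This is not how the Semaphore importer works. Real imports should use a full RDF parser.

```python
import re
from collections import defaultdict

SKOS = "http://www.w3.org/2004/02/skos/core#"

# Matches simple N-Triples lines: <subject> <predicate> (<iri> | "literal"[@lang]) .
TRIPLE_RE = re.compile(r'^<([^>]+)>\s+<([^>]+)>\s+(?:<([^>]+)>|"([^"]*)"(?:@[\w-]+)?)\s*\.\s*$')

# Invented sample data for illustration only.
SAMPLE = """\
<http://example.org/c/sweetener> <http://www.w3.org/2004/02/skos/core#prefLabel> "Sweetening Agent"@en .
<http://example.org/c/hfcs> <http://www.w3.org/2004/02/skos/core#prefLabel> "High Fructose Corn Syrup"@en .
<http://example.org/c/hfcs> <http://www.w3.org/2004/02/skos/core#altLabel> "Glucose-fructose syrup"@en .
<http://example.org/c/hfcs> <http://www.w3.org/2004/02/skos/core#broader> <http://example.org/c/sweetener> .
"""


def load_skos(ntriples: str) -> dict[str, dict[str, list[str]]]:
    """Group SKOS property values (prefLabel, altLabel, broader, ...) by concept IRI."""
    concepts = defaultdict(lambda: defaultdict(list))
    for line in ntriples.splitlines():
        match = TRIPLE_RE.match(line.strip())
        if not match:
            continue  # skip blank lines, comments and unsupported syntax
        subject, predicate, iri_obj, literal_obj = match.groups()
        if not predicate.startswith(SKOS):
            continue  # ignore non-SKOS properties in this sketch
        key = predicate[len(SKOS):]  # e.g. "prefLabel"
        concepts[subject][key].append(iri_obj if iri_obj is not None else literal_obj)
    return {iri: dict(props) for iri, props in concepts.items()}


for iri, props in load_skos(SAMPLE).items():
    print(iri, props)
```

After loading, the High Fructose Corn Syrup concept carries its preferred label, its alternative label and a `broader` link to the sweetener concept. Labels plus hierarchy links are the raw material a knowledge model is built from.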

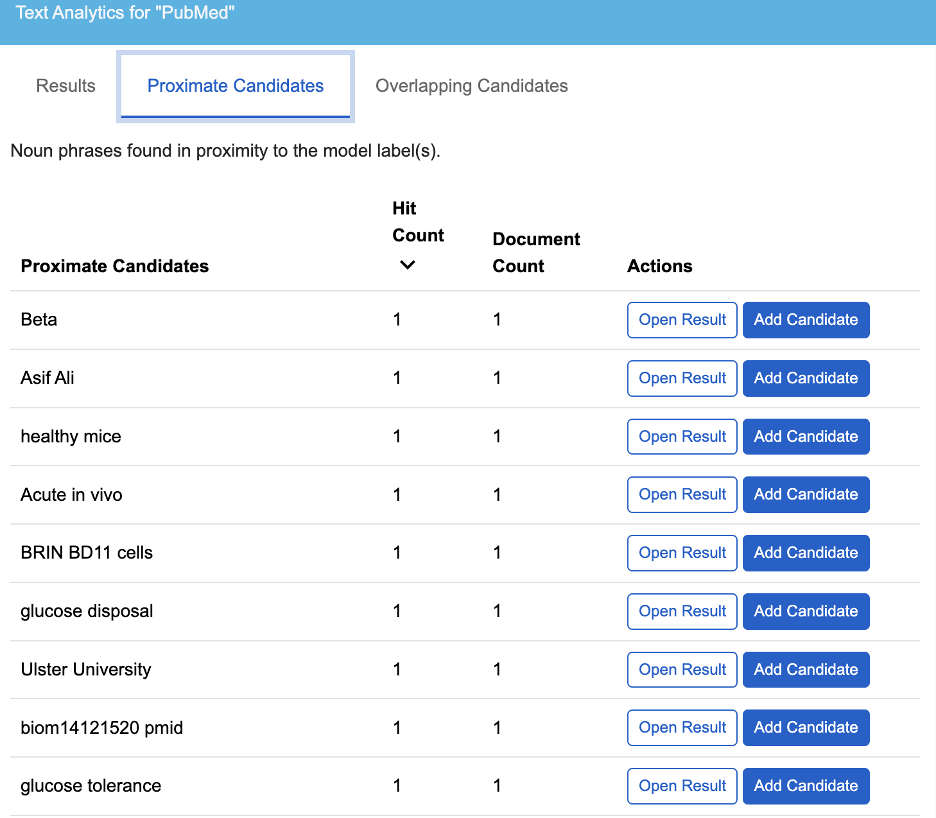

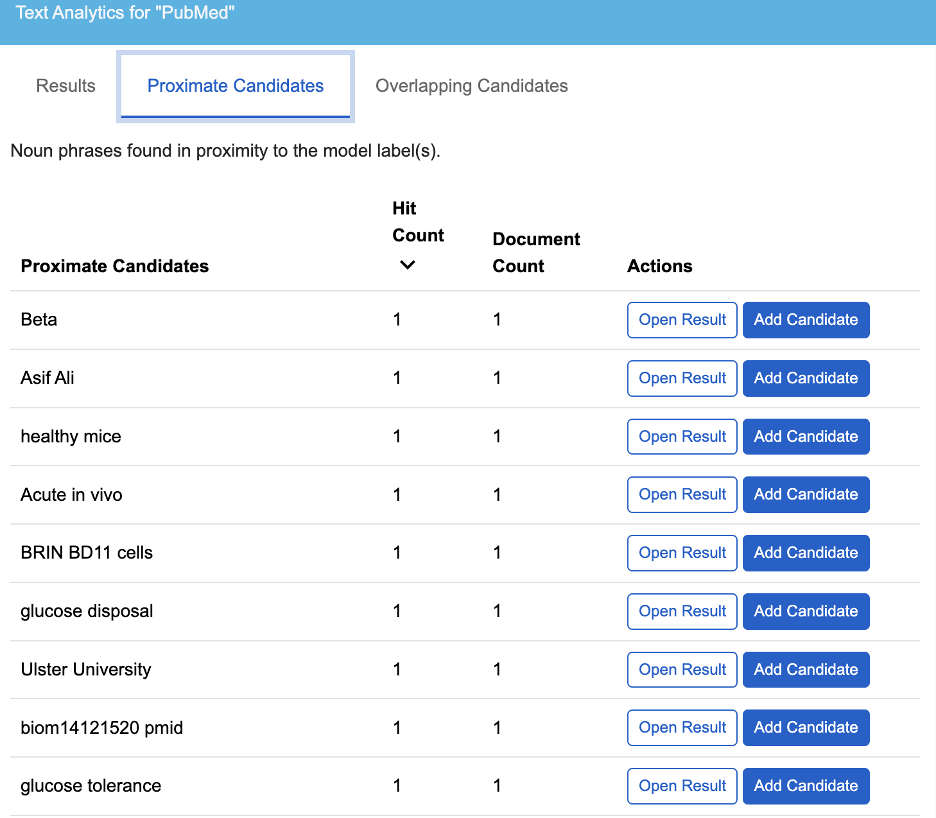

In addition to importing existing models, you can use the Semaphore Natural Language Processing (NLP) framework to mine documents for concepts. Organizations sit on large corpora of long-form text that describe their processes and contain the supporting information needed to run the business. Text mining can surface named entities and noun phrases that can be distilled into a knowledge model. From the resulting reports, you can add candidate concepts to your model.

Image 2: A table of noun phrases and entities from text mining
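To show the general idea of mining candidate terms, the sketch below uses a much cruder technique than real noun-phrase chunking and named-entity recognition. It is a RAKE-style split at punctuation and stopwords, followed by frequency counting across documents. The stopword list, the thresholds and the function names are assumptions for illustration, not the Semaphore NLP framework.

```python
import re
from collections import Counter

# Deliberately tiny stopword list (an assumption); real pipelines use POS tagging and NER.
STOPWORDS = {
    "a", "an", "and", "are", "as", "at", "by", "for", "from", "in", "is",
    "of", "on", "or", "the", "to", "was", "were", "which", "with",
}


def candidate_phrases(text: str, max_words: int = 3) -> list[str]:
    """Split text at punctuation and stopwords; keep the short word runs in between."""
    phrases: list[str] = []
    for fragment in re.split(r"[.,;:!?()\n]+", text):
        run: list[str] = []
        # The trailing "" acts as a sentinel that flushes the final run.
        for word in re.findall(r"\w[\w-]*", fragment) + [""]:
            if word and word.lower() not in STOPWORDS:
                run.append(word.lower())
                continue
            if 0 < len(run) <= max_words:
                phrases.append(" ".join(run))
            run = []
    return phrases


def mine_candidates(docs: list[str], known_labels: set[str],
                    min_count: int = 2) -> list[tuple[str, int]]:
    """Count phrases across documents, keeping frequent ones not already in the model."""
    counts = Counter(p for doc in docs for p in candidate_phrases(doc))
    known = {label.lower() for label in known_labels}
    return [(p, n) for p, n in counts.most_common() if n >= min_count and p not in known]


docs = [
    "GLP-1 levels were measured in adults with type 2 diabetes.",
    "Glucose-fructose syrup was given to adults with type 2 diabetes.",
    "GLP-1 levels were higher with glucose-fructose syrup.",
]
print(mine_candidates(docs, {"GLP-1", "glucose-fructose syrup"}))
```

With these three sample sentences the output is `[('glp-1 levels', 2), ('adults', 2), ('type 2 diabetes', 2)]`. The noisy "adults" entry is typical of frequency-based mining. This is why the output is best treated as a list of candidates for SMEs to review rather than concepts to add automatically.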

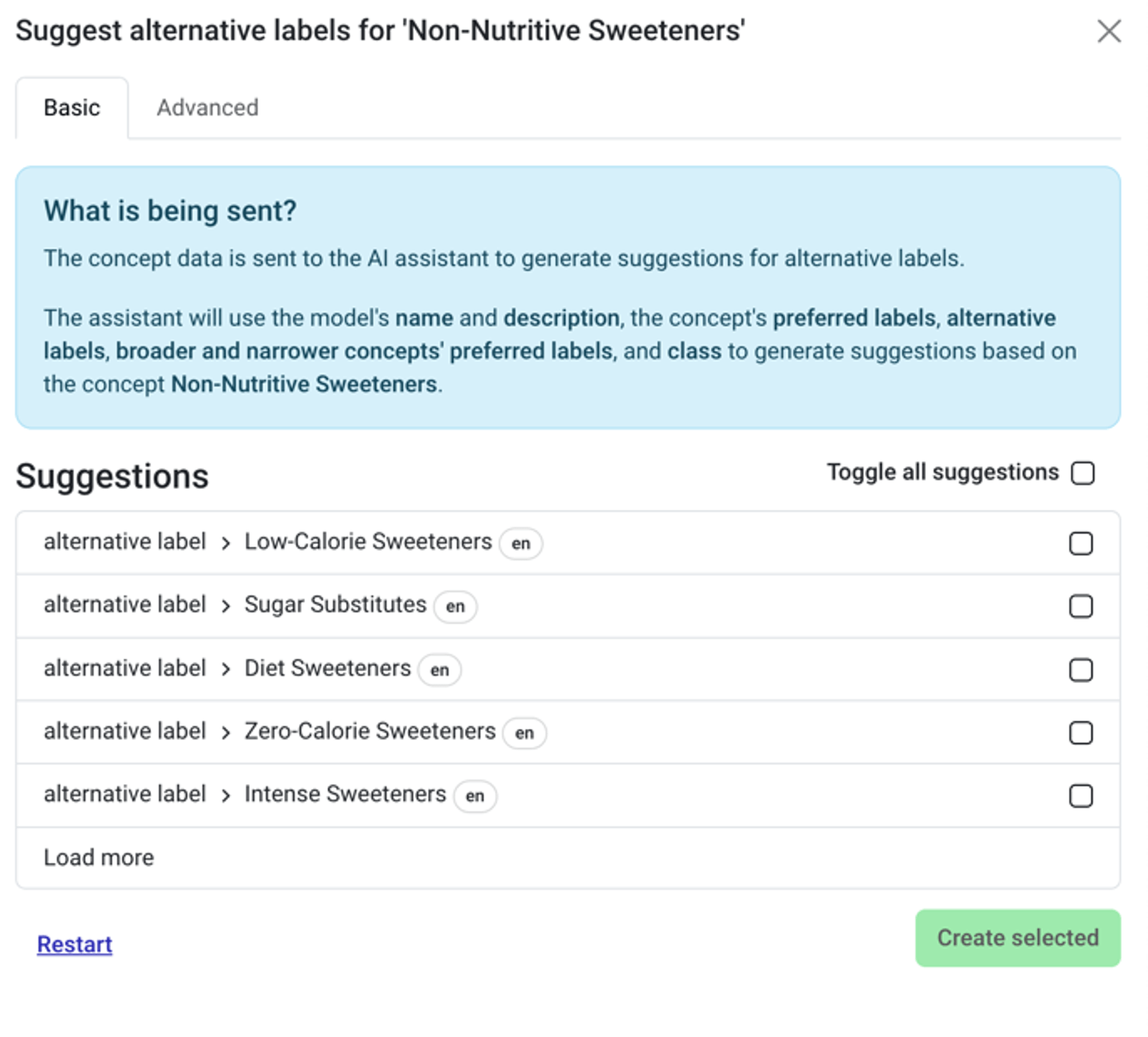

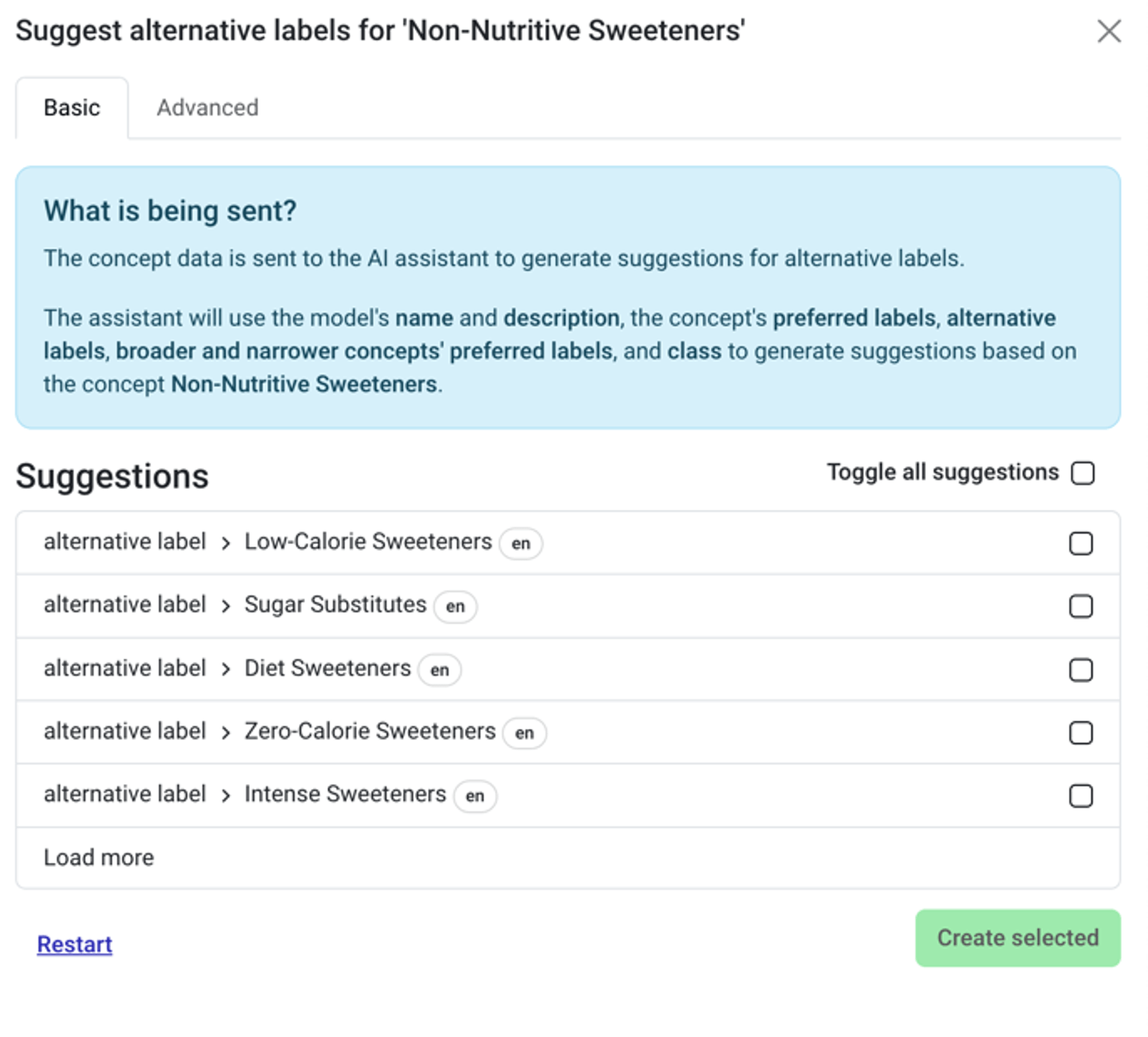

While your corpus of data, now mapped in a knowledge graph, contains valuable information, you may want to look to external sources to complement and enrich your model. Semaphore Studio contains GenAI integrations to aid in the development of your knowledge models.

To get started, select a concept in your concept scheme. From the concept, you can use GenAI to generate additional labels, suggest narrower concepts and provide additional metadata.

Image 3: Adding additional labels to a concept through GenAI

Image 4: Adding narrower concepts through GenAI
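GenAI suggestions like these still need SME review, and they usually need some cleanup first. The sketch below assumes the model was asked to return one suggestion per line. The reply is hard-coded rather than fetched from an LLM, and `parse_suggestions` is a hypothetical helper. The function strips list markers, then drops duplicates and labels the concept already has, so that only new candidates reach the reviewer.

```python
import re


def parse_suggestions(response: str, existing_labels: list[str]) -> list[str]:
    """Turn a one-suggestion-per-line LLM reply into new, de-duplicated candidates."""
    seen = {label.casefold() for label in existing_labels}
    candidates: list[str] = []
    for line in response.splitlines():
        # Strip bullets or numbering such as "- ", "* ", "\u2022 " or "3. " that models often add.
        label = re.sub(r"^\s*(?:[-*\u2022]|\d+[.)])\s*", "", line).strip().strip('"')
        if label and label.casefold() not in seen:
            seen.add(label.casefold())
            candidates.append(label)
    return candidates


# Hard-coded stand-in for a model reply; no LLM is called here.
reply = """1. GLP-1
2. Glucagon-like peptide 1
3. glp-1
- incretin hormone GLP-1
"""

print(parse_suggestions(reply, ["glucagon-like peptide-1", "GLP-1"]))
```

Here only `Glucagon-like peptide 1` and `incretin hormone GLP-1` survive. The repeated `glp-1` is dropped because the comparison uses `str.casefold`. Near-variants, such as the label that is missing a hyphen, still need a human decision.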

Depending on the large language model (LLM) you use, you may want to customize the prompt. The Semaphore platform lets you edit this prompt, for example to generate a more concise list of candidates.

Image 5: Customize the LLM prompt for concept generation
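Prompt customization like this is often easiest to manage as a template with named placeholders. The sketch below uses Python's `string.Template`. Both prompt texts and the placeholder names (`concept`, `domain`, `max_items`, `audience`) are hypothetical, because the actual Semaphore prompt is not reproduced in this post. The second template shows one way to ask for a shorter, more focused list.

```python
from string import Template

# Hypothetical default prompt; the real Semaphore prompt text is not shown here.
DEFAULT_PROMPT = Template(
    "List narrower concepts for \"$concept\" in the domain of $domain.\n"
    "Return one concept per line with no numbering or explanations."
)

# A customized variant that caps the list length and narrows the vocabulary.
CONCISE_PROMPT = Template(
    "List at most $max_items narrower concepts for \"$concept\" in the domain of $domain.\n"
    "Prefer terms used in $audience literature.\n"
    "Return one concept per line with no numbering or explanations."
)


def build_prompt(template: Template, **fields: object) -> str:
    """Fill a prompt template; raises KeyError if any placeholder is left unfilled."""
    return template.substitute(**fields)


print(build_prompt(CONCISE_PROMPT, concept="Sweetening Agent", domain="food science",
                   max_items=5, audience="regulatory"))
```

Using `Template.substitute` rather than `safe_substitute` makes a forgotten placeholder fail with a `KeyError`. Otherwise, a literal `$max_items` would be sent silently to the model.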

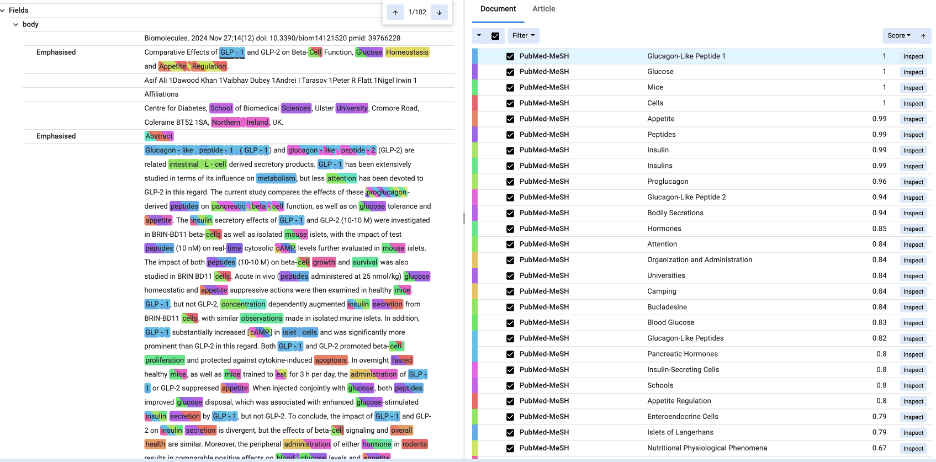

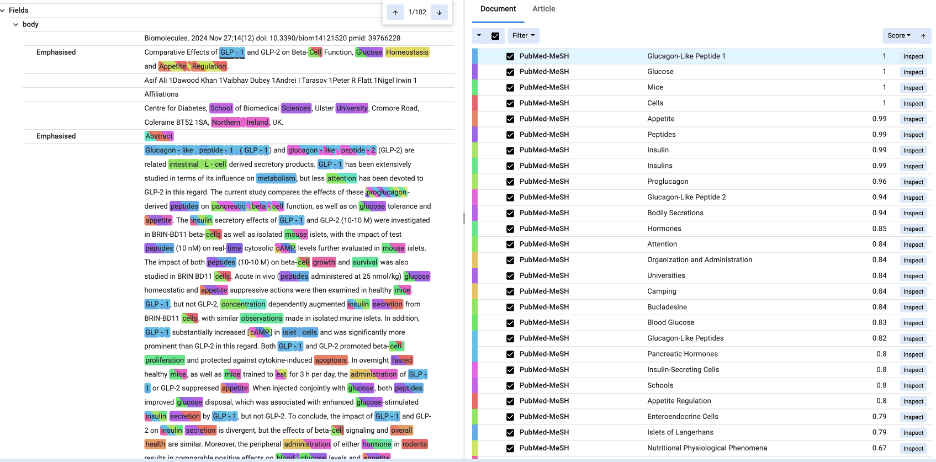

Now that you have a richer knowledge model, the next step is to publish it to the Semaphore Classification Service. Classification uses the NLP engine together with rulesets generated from the knowledge model. Before running data through an automated flow, you can verify the classification with the Document Analyzer tool, which takes sample text and classifies it using the models. In the example shown in Image 6, the model found matches for concepts in the knowledge model. It also identified concepts based on supporting information in the text. Note that the text does not need to mention a concept's label directly for the concept to be identified. If enough supporting information is found, the text is classified with the pertinent concept.

Image 6: Classification results identifying key concepts
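A concept can be assigned even when its label never appears in the text. A simple evidence-scoring scheme illustrates how:

- A direct label match counts as strong evidence.
- Supporting terms add smaller amounts.
- The concept is assigned once the total reaches a threshold.

The rule structure, weights and threshold below are assumptions made for this sketch. Semaphore generates its own rulesets from the knowledge model, and their format is not described in this post.

```python
import re
from dataclasses import dataclass


@dataclass
class ConceptRule:
    concept: str
    labels: list[str]              # direct mentions: strong evidence
    evidence: dict[str, float]     # supporting terms with assumed weights
    label_weight: float = 1.0
    threshold: float = 1.0


def mentions(text: str, term: str) -> int:
    """Count case-insensitive whole-phrase occurrences of `term` in `text`."""
    return len(re.findall(rf"(?<![\w-]){re.escape(term)}(?![\w-])", text, flags=re.IGNORECASE))


def score(text: str, rule: ConceptRule) -> float:
    """Sum weighted label matches and weighted supporting-term matches."""
    total = sum(rule.label_weight * mentions(text, label) for label in rule.labels)
    total += sum(weight * mentions(text, term) for term, weight in rule.evidence.items())
    return total


def classify(text: str, rules: list[ConceptRule]) -> dict[str, float]:
    """Return each concept whose score reaches its threshold, with the rounded score."""
    results = {}
    for rule in rules:
        s = score(text, rule)
        if s >= rule.threshold:
            results[rule.concept] = round(s, 2)
    return results


rules = [
    ConceptRule(
        concept="glucagon-like peptide-1",
        labels=["glucagon-like peptide-1", "GLP-1"],
        evidence={"incretin": 0.4, "insulin secretion": 0.4, "semaglutide": 0.5},
    ),
]

text = "Semaglutide mimics an incretin hormone and increases insulin secretion."
print(classify(text, rules))
```

The sample sentence never mentions GLP-1. Yet it scores 1.3 from its three supporting terms and is assigned the concept. A sentence containing none of the terms scores 0 and is not assigned it.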

You now have a well-developed knowledge model and classification strategy. All of the work shown here was done by a subject matter expert through the Semaphore platform’s intuitive user interface. Later posts in this series will cover how to use APIs to work with the model and classifications at scale, so you can continue preparing your content for consumption.

To explore the full process for designing a RAG workflow to enhance the accuracy of your LLM responses, download our Semantic RAG whitepaper.
